1. Which DNS name can only be resolved within Amazon EC2?

- D. Private DNS name

- http://docs.aws.amazon.com/AmazonVPC/latest/UserGuide/vpc-dns.html

- An Amazon-provided private (internal) DNS hostname resolves to the private IPv4 address of the instance, and takes the form ip-private-ipv4-address.ec2.internal for the us-east-1 region, and ip-private-ipv4-address.region.compute.internal for other regions (where private-ipv4-address is the instance's private IPv4 address, private.ipv4.address, with the dots replaced by hyphens).
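The naming rule above can be sketched in a few lines. This is a minimal illustration, assuming the default Amazon-provided DNS naming in a VPC; `private_dns_name` is a hypothetical helper, not an AWS API:

```python
import ipaddress


def private_dns_name(private_ip: str, region: str) -> str:
    """Build the Amazon-provided private DNS hostname for an EC2 instance.

    Hypothetical helper illustrating the documented pattern: us-east-1 uses
    ec2.internal, every other region uses <region>.compute.internal.
    """
    # Validate that the input is an IPv4 address (raises ValueError otherwise).
    ip = ipaddress.IPv4Address(private_ip)
    # The dots of the address become hyphens in the hostname label.
    label = "ip-" + str(ip).replace(".", "-")
    if region == "us-east-1":
        return f"{label}.ec2.internal"
    return f"{label}.{region}.compute.internal"


print(private_dns_name("10.0.0.12", "us-east-1"))   # ip-10-0-0-12.ec2.internal
print(private_dns_name("172.31.5.9", "eu-west-1"))  # ip-172-31-5-9.eu-west-1.compute.internal
```

Because these names live in the VPC's private DNS, a lookup from outside Amazon EC2 does not resolve them.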

2. Is it possible to access your EBS snapshots?

- B. Yes, through the Amazon EC2 APIs.

- https://aws.amazon.com/ebs/faqs/?nc1=h_ls

- Q: Will I be able to access my snapshots using the regular Amazon S3 API? No, snapshots are only available through the Amazon EC2 API.

3. Can the string value of ‘Key’ be prefixed with “aws:”?

- A. No

- http://docs.aws.amazon.com/cli/latest/reference/rds/list-tags-for-resource.html

- Key -> (string)

A key is the required name of the tag. The string value can be from 1 to 128 Unicode characters in length and cannot be prefixed with “aws:” or “rds:”. The string can contain only the set of Unicode letters, digits, white-space, ‘_’, ‘.’, ‘/’, ‘=’, ‘+’, ‘-’ (Java regex: “^([\p{L}\p{Z}\p{N}_.:/=+\-]*)$”).

Value -> (string)

A value is the optional value of the tag. The string value can be from 1 to 256 Unicode characters in length and cannot be prefixed with “aws:” or “rds:”. The string can contain only the set of Unicode letters, digits, white-space, ‘_’, ‘.’, ‘/’, ‘=’, ‘+’, ‘-’ (Java regex: “^([\p{L}\p{Z}\p{N}_.:/=+\-]*)$”).
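The key and value rules above can be checked client-side before calling the API. A minimal sketch: Python's built-in `re` module does not support `\p{L}`-style classes, so this version mirrors the Java regex with `unicodedata` categories (L* letters, Z* separators, N* numbers). The function names are hypothetical, and the reserved prefixes are checked exactly as quoted (case-sensitively):

```python
import unicodedata

ALLOWED_PUNCTUATION = set("_.:/=+-")
RESERVED_PREFIXES = ("aws:", "rds:")


def _allowed_chars(s: str) -> bool:
    # Mirrors [\p{L}\p{Z}\p{N}_.:/=+\-]: any Unicode letter, separator,
    # or number, plus the listed punctuation characters.
    return all(
        unicodedata.category(ch)[0] in "LZN" or ch in ALLOWED_PUNCTUATION
        for ch in s
    )


def is_valid_tag(s: str, max_len: int) -> bool:
    """Apply the length, reserved-prefix, and character rules quoted above."""
    if not 1 <= len(s) <= max_len:
        return False
    if s.startswith(RESERVED_PREFIXES):
        return False
    return _allowed_chars(s)


def is_valid_tag_key(key: str) -> bool:
    return is_valid_tag(key, 128)


def is_valid_tag_value(value: str) -> bool:
    return is_valid_tag(value, 256)


print(is_valid_tag_key("Environment"))    # True
print(is_valid_tag_key("aws:createdBy"))  # False
```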

4. In the context of MySQL, version numbers are organized as MySQL version = X.Y.Z. What does X denote here?

- D. major version

- https://aws.amazon.com/rds/mysql/faqs/

- MySQL version = X.Y.Z, where X = major version, Y = release level, and Z = version number within the release series.

5. Is decreasing the storage size of a DB Instance permitted?

- C. No

- You cannot decrease the storage allocated for a DB instance.

6. Select the correct set of steps for exposing the snapshot only to specific AWS accounts

- B. Select Private, enter the IDs of those AWS accounts, and click Save.

- http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ebs-modifying-snapshot-permissions.html

- To expose the snapshot to only specific AWS accounts, choose Private, enter the ID of the AWS account (without hyphens) in the AWS Account Number field, and choose Add Permission. Repeat until you’ve added all the required AWS accounts.

7. Which Amazon storage option is best for database-style applications that frequently perform many random reads and writes across the dataset?

- D. Amazon EBS

- http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/AmazonEBS.html

- Amazon EBS is recommended when data must be quickly accessible and requires long-term persistence. EBS volumes are particularly well-suited for use as the primary storage for file systems, databases, or for any applications that require fine granular updates and access to raw, unformatted, block-level storage. Amazon EBS is well suited to both database-style applications that rely on random reads and writes, and to throughput-intensive applications that perform long, continuous reads and writes.

8. Does Route 53 support MX records (Mail Exchange records)?

- A. Yes.

- http://docs.aws.amazon.com/Route53/latest/DeveloperGuide/ResourceRecordTypes.html#MXFormat

- Each value for an MX resource record set actually contains two values:

- An integer that represents the priority for an email server

- The domain name of the email server
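The two-part MX value described above can be parsed and ordered as follows. A minimal sketch with hypothetical example.com servers; it follows the standard SMTP convention that a lower priority number means a more preferred server:

```python
from __future__ import annotations


def parse_mx_value(value: str) -> tuple[int, str]:
    """Split an MX value such as "10 mail.example.com" into (priority, mail server)."""
    priority_text, server = value.split(maxsplit=1)
    return int(priority_text), server.strip()


def servers_by_preference(values: list[str]) -> list[str]:
    """Order mail servers so the lowest priority number (most preferred) comes first."""
    return [server for _, server in sorted(parse_mx_value(v) for v in values)]


record_values = ["20 backup.example.com", "10 mail.example.com"]
print(servers_by_preference(record_values))  # ['mail.example.com', 'backup.example.com']
```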

9. Does Amazon RDS for SQL Server currently support importing data into the msdb database?

- A. No

- http://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/SQLServer.Procedural.Importing.html#SQLServer.Procedural.Importing.Procedure

- Amazon RDS for Microsoft SQL Server does not support importing data into the msdb database.

10. If your DB instance runs out of storage space or file system resources, its status will change to _____ and your DB instance will no longer be available.

- B. storage-full

- http://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/CHAP_Troubleshooting.html

- If your database instance runs out of storage, its status will change to storage-full.

11. Is it possible to access your EBS snapshots?

- B. Yes, through the Amazon EC2 APIs.

- https://aws.amazon.com/ebs/faqs/?nc1=h_ls

- Q: Will I be able to access my snapshots using the regular Amazon S3 API? No, snapshots are only available through the Amazon EC2 API.

12. It is advised that you watch the Amazon CloudWatch “_____” metric (available via the AWS Management Console or Amazon CloudWatch APIs) carefully and recreate the Read Replica should it fall behind due to replication errors.

- C. Replica Lag

- https://aws.amazon.com/rds/mysql/faqs/

- Q: Which storage engines are supported for use with Amazon RDS for MySQL Read Replicas? Amazon RDS for MySQL Read Replicas require a transactional storage engine and are only supported for the InnoDB storage engine. Non-transactional MySQL storage engines such as MyISAM might prevent Read Replicas from working as intended. However, if you still choose to use MyISAM with Read Replicas, we advise you to watch the Amazon CloudWatch “Replica Lag” metric (available via the AWS Management Console or Amazon CloudWatch APIs) carefully and recreate the Read Replica should it fall behind due to replication errors. The same considerations apply to the use of temporary tables and any other non-transactional engines.

13. By default, what happens to ENIs (Elastic Network Interfaces) that are automatically created and attached to instances using the EC2 console when the attached instance terminates?

- B. Terminate

- http://docs.aws.amazon.com/AmazonVPC/latest/UserGuide/VPC_ElasticNetworkInterfaces.html

- Changing termination behavior: By default, network interfaces that are automatically created and attached to instances using the console are set to terminate when the instance terminates. However, network interfaces created using the command line interface aren’t set to terminate when the instance terminates.

14. You can use _____ and _____ to help secure the instances in your VPC.

- D. security groups and network ACLs

15. _____ is a durable, block-level storage volume that you can attach to a single, running Amazon EC2 instance.

- B. Amazon EBS

- http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/EBSVolumes.html

- An Amazon EBS volume is a durable, block-level storage device. By default, EBS volumes that are attached to a running instance automatically detach from the instance with their data intact when that instance is terminated.

16. If I want my instance to run on single-tenant hardware, which value do I have to set the instance’s tenancy attribute to?

- A. dedicated

- https://aws.amazon.com/ec2/purchasing-options/dedicated-instances/

- Dedicated Instances are Amazon EC2 instances that run in a VPC on hardware that’s dedicated to a single customer. Your Dedicated instances are physically isolated at the host hardware level from instances that belong to other AWS accounts. Dedicated instances may share hardware with other instances from the same AWS account that are not Dedicated instances. Pay for Dedicated Instances On-Demand, save up to 70% by purchasing Reserved Instances, or save up to 90% by purchasing Spot Instances.

17. What does Amazon RDS stand for?

- B. Relational Database Service.

18. What does Amazon ELB stand for?

- D. Elastic Load Balancing.

19. Is there a limit to the number of groups you can have?

- C. Yes, unless special permission is granted

- http://docs.aws.amazon.com/IAM/latest/UserGuide/id_groups.html

- There’s a limit to the number of groups you can have, and a limit to how many groups a user can be in. For more information, see Limitations on IAM Entities and Objects.

- http://docs.aws.amazon.com/IAM/latest/UserGuide/reference_iam-limits.html

Groups in an AWS account: 100. You can request to increase some of these quotas for your AWS account on the IAM Limit Increase Contact Us Form. Currently you can request to increase the limit on users per AWS account, groups per AWS account, roles per AWS account, instance profiles per AWS account, and server certificates per AWS account.

20. The location of instances is ____________

- B. based on Availability Zone

- http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/using-regions-availability-zones.html#concepts-regions-availability-zones

- When you launch an instance, you can select an Availability Zone or let us choose one for you. If you distribute your instances across multiple Availability Zones and one instance fails, you can design your application so that an instance in another Availability Zone can handle requests.

21. Is there any way to own a direct connection to Amazon Web Services?

- D. Yes, it’s called Direct Connect.

- http://docs.aws.amazon.com/directconnect/latest/UserGuide/Welcome.html

- Create a connection in an AWS Direct Connect location to establish a network connection from your premises to an AWS region.

- https://aws.amazon.com/directconnect/

- AWS Direct Connect makes it easy to establish a dedicated network connection from your premises to AWS.

22. You must assign each server to at least _____________ security group

- C. 1

- http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/using-network-security.html

- Your AWS account automatically has a default security group per VPC and per region for EC2-Classic. If you don’t specify a security group when you launch an instance, the instance is automatically associated with the default security group.

23. Does DynamoDB support in-place atomic updates?

- C. Yes

- https://aws.amazon.com/dynamodb/faqs/

- Amazon DynamoDB supports fast in-place updates. You can increment or decrement a numeric attribute in a row using a single API call. Similarly, you can atomically add to or remove from sets, lists, or maps. View our documentation for more information on atomic updates.
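An atomic counter increment like the one described above is expressed as an `UpdateExpression` on a single `UpdateItem` request. A minimal sketch that only builds the request parameters; the table name, the key attribute `pk`, and the counter attribute `views` are hypothetical:

```python
def build_counter_update(table: str, page_id: str, amount: int = 1) -> dict:
    """Return UpdateItem parameters that atomically add `amount` to a numeric attribute.

    Table name, key attribute ("pk"), and counter attribute ("views") are hypothetical.
    """
    return {
        "TableName": table,
        "Key": {"pk": {"S": page_id}},
        # The addition is evaluated server-side in one request, so concurrent
        # callers do not overwrite each other's increments. if_not_exists
        # starts the counter at 0 when the attribute is missing.
        "UpdateExpression": "SET #views = if_not_exists(#views, :zero) + :inc",
        "ExpressionAttributeNames": {"#views": "views"},
        "ExpressionAttributeValues": {":inc": {"N": str(amount)}, ":zero": {"N": "0"}},
        "ReturnValues": "UPDATED_NEW",
    }


params = build_counter_update("PageViews", "home", 5)
print(params["UpdateExpression"])
```

With boto3 installed and credentials configured, the dictionary could be passed as `boto3.client("dynamodb").update_item(**params)`; that call is not executed here.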

24. Is there a method in the IAM system to allow or deny access to a specific instance?

- C. No

- http://docs.aws.amazon.com/IAM/latest/UserGuide/IAM_UseCases.html

- There’s no method in the IAM system to allow or deny access to the operating system of a specific instance.

25. What does Amazon SES stand for?

- B. Simple Email Service

- https://aws.amazon.com/ses/

- Amazon Simple Email Service (Amazon SES) is a cost-effective email service.

26. Amazon S3 doesn’t automatically give a user who creates _____ permission to perform other actions on that bucket or object.

- B. a bucket or object

- http://docs.aws.amazon.com/IAM/latest/UserGuide/IAM_UseCases.html

- Amazon S3 doesn’t automatically give a user who creates a bucket or object permission to perform other actions on that bucket or object. Therefore, in your IAM policies, you must explicitly give users permission to use the Amazon S3 resources they create.

27. Can I attach more than one policy to a particular entity?

- A. Yes, always

- http://docs.aws.amazon.com/IAM/latest/UserGuide/access_policies.html

- You can attach more than one policy to an entity.

28. Fill in the blank: A _____ is a storage device that moves data in sequences of bytes or bits (blocks). Hint: These devices support random access and generally use buffered I/O.

- D. block device

- http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/block-device-mapping-concepts.html

- A block device is a storage device that moves data in sequences of bytes or bits (blocks). These devices support random access and generally use buffered I/O.

29. Can I detach the primary (eth0) network interface when the instance is running or stopped?

- B. No, you cannot.

- http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/using-eni.html

- You cannot detach a primary network interface from an instance.

30. What’s an ECU?

- D. Elastic Compute Unit.

- https://aws.amazon.com/ec2/faqs/

- EC2 Compute Unit (ECU)

31. REST or Query requests are HTTP or HTTPS requests that use an HTTP verb (such as GET or POST) and a parameter named Action or Operation that specifies the API you are calling.

- A. FALSE

- http://docs.aws.amazon.com/AWSEC2/latest/APIReference/Query-Requests.html

- Query requests are HTTP or HTTPS requests that use the HTTP verb GET or POST and a Query parameter named Action.
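The Query request shape described above can be sketched with the standard library. This builds an unsigned GET URL only; real EC2 requests must also be signed (Signature Version 4), which is omitted here. The instance ID is a hypothetical placeholder:

```python
from urllib.parse import urlencode


def build_query_url(action: str, params: dict, endpoint: str = "https://ec2.amazonaws.com/") -> str:
    """Assemble an unsigned EC2 Query API GET URL; Action names the API being called."""
    query = {"Action": action, "Version": "2016-11-15", **params}
    return endpoint + "?" + urlencode(query)


url = build_query_url("DescribeInstances", {"InstanceId.1": "i-1234567890abcdef0"})
print(url)
# https://ec2.amazonaws.com/?Action=DescribeInstances&Version=2016-11-15&InstanceId.1=i-1234567890abcdef0
```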

32. What is the charge for the data transfer incurred in replicating data between your primary and standby?

- A. No charge. It is free.

- https://aws.amazon.com/rds/faqs/?nc1=h_ls

- Data transfer – You are not charged for the data transfer incurred in replicating data between your primary and standby. Internet data transfer in and out of your DB instance is charged the same as with a standard deployment.

33. Does AWS Direct Connect allow you access to all Availability Zones within a Region?

- C. Yes

- https://aws.amazon.com/directconnect/faqs/

- Q. What Availability Zone(s) can I connect to via this connection?

Each AWS Direct Connect location enables connectivity to all Availability Zones within the geographically nearest AWS region.

34. How many types of block devices does Amazon EC2 support?

- 2 (instance store volumes and EBS volumes)

- http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/block-device-mapping-concepts.html

- Amazon EC2 supports two types of block devices:

- Instance store volumes (virtual devices whose underlying hardware is physically attached to the host computer for the instance)

- EBS volumes (remote storage devices)

35. What does the “Server Side Encryption” option on Amazon S3 provide?

- C. It encrypts the files that you send to Amazon S3, on the server side.

- https://docs.aws.amazon.com/AmazonS3/latest/dev/UsingServerSideEncryption.html

- Amazon S3 supports bucket policies that you can use if you require server-side encryption for all objects that are stored in your bucket.

36. Making your snapshot public shares all snapshot data with everyone. Can the snapshots with AWS Marketplace product codes be made public?

- No. Encrypted snapshots and snapshots with AWS Marketplace product codes cannot be made public.

- http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ebs-modifying-snapshot-permissions.html#d0e80912

37. What does Amazon EBS stand for?

- D. Elastic Block Store

- https://aws.amazon.com/ebs/

- Amazon Elastic Block Store (EBS)

38. Within the IAM service, a GROUP is regarded as:

- D. A collection of users

- http://docs.aws.amazon.com/IAM/latest/UserGuide/id.html

- An IAM group is a collection of IAM users. You can use groups to specify permissions for a collection of users, which can make those permissions easier to manage for those users.

39. A __________ is the concept of allowing (or disallowing) an entity such as a user, group, or role some type of access to one or more resources.

- D. permission

- http://docs.aws.amazon.com/IAM/latest/UserGuide/introduction_access-management.html

- Permissions are granted through policies that are created and then attached to users, groups, or roles.

40. Do the system resources on the Micro instance meet the recommended configuration for Oracle?

- B. Yes, but only for certain situations

- https://acloud.guru/forums/aws-certified-solutions-architect-associate/discussion/-KGlCEGaR-JvcEQbg_g_/micro-instance-oracle-rds

- “We recommend that you use db.t1.micro instances with Oracle to test setup and connectivity only.”

41. Will I be charged if the DB instance is idle?

- B. Yes

- https://aws.amazon.com/rds/faqs/

- Q: When does billing of my Amazon RDS DB instances begin and end? Billing commences for a DB instance as soon as the DB instance is available. Billing continues until the DB instance terminates, which would occur upon deletion or in the event of instance failure.

42. To help you manage your Amazon EC2 instances, images, and other Amazon EC2 resources, you can assign your own metadata to each resource in the form of____________

- C. tags

- http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/Using_Tags.html

- To help you manage your instances, images, and other Amazon EC2 resources, you can optionally assign your own metadata to each resource in the form of tags

43. True or False: When you add a rule to a DB security group, you do not need to specify port number or protocol.

- B. TRUE

- http://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/Overview.RDSSecurityGroups.html

- DB security group: controls access to DB instances outside a VPC. Uses the Amazon RDS APIs or the Amazon RDS page of the AWS Management Console to create and manage groups/rules. When you add a rule to a group, you do not need to specify port number or protocol. Groups allow access from EC2 security groups in your AWS account or other accounts.

- VPC security group: controls access to DB instances in a VPC. Uses the Amazon EC2 APIs or the Amazon VPC page of the AWS Management Console to create and manage groups/rules. When you add a rule to a group, you should specify the protocol as TCP, and specify the same port number that you used to create the DB instances (or options) you plan to add as members to the group. Groups allow access from other VPC security groups in your VPC only.

44. Can I initiate a “forced failover” for my Oracle Multi-AZ DB Instance deployment?

- A. Yes

- https://aws.amazon.com/rds/faqs/#46

- Q: Can I initiate a “forced failover” for my Multi-AZ DB instance deployment? Amazon RDS will automatically fail over without user intervention under a variety of failure conditions. In addition, Amazon RDS provides an option to initiate a failover when rebooting your instance. You can access this feature via the AWS Management Console or when using the RebootDBInstance API call.

45. Amazon EC2 provides a repository of public data sets that can be seamlessly integrated into AWS cloud-based applications. What is the monthly charge for using the public data sets?

- D. There is no charge for using the public data sets

- https://aws.amazon.com/public-datasets/

- AWS hosts a variety of public datasets that anyone can access for free.

46. In the Amazon RDS Oracle DB engine, the Database Diagnostic Pack and the Database Tuning Pack are only available with ______________

- C. Oracle Enterprise Edition

- https://aws.amazon.com/rds/faqs/#46

- Q: Which Enterprise Edition Options are supported on Amazon RDS? The following Enterprise Edition Options are currently supported under the BYOL model:

- Advanced Security (Transparent Data Encryption, Native Network Encryption)

- Partitioning

- Management Packs (Diagnostic, Tuning)

- Advanced Compression

- Total Recall

47. Amazon RDS supports SOAP only through __________.

- D. HTTPS

- http://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/using-soap-api.html

- Amazon RDS supports SOAP only through HTTPS.

48. Without _____, you must either create multiple AWS accounts, each with its own billing and subscriptions to AWS products, or your employees must share the security credentials of a single AWS account.

- D. Amazon IAM

- http://docs.aws.amazon.com/IAM/latest/UserGuide/getting-setup.html

- Without IAM, however, you must either create multiple AWS accounts, each with its own billing and subscriptions to AWS products, or your employees must share the security credentials of a single AWS account.

49. The Amazon EC2 web service can be accessed using the _____ web services messaging protocol. This interface is described by a Web Services Description Language (WSDL) document.

- A. SOAP

50. HTTP Query-based requests are HTTP requests that use the HTTP verb GET or POST and a Query parameter named_____________.

- A. Action

51. Amazon RDS creates an SSL certificate and installs the certificate on the DB Instance when Amazon RDS provisions the instance. These certificates are signed by a certificate authority. The _____ is stored at https://rds.amazonaws.com/doc/rds-ssl-ca-cert.pem.

- C. public key

- https://aws.amazon.com/blogs/aws/amazon-rds-sql-server-ssl-support/

- Enabling SSL Support

Here’s all you need to do to enable SSL Support: Download a public certificate key from RDS at https://rds.amazonaws.com/doc/rds-ssl-ca-cert.pem

52. What is the name of the licensing model in which you can use your existing Oracle Database licenses to run Oracle deployments on Amazon RDS?

- A. Bring Your Own License

- https://aws.amazon.com/oracle/

- Oracle customers can now license Oracle Database 12c, Oracle Fusion Middleware, and Oracle Enterprise Manager to run in the AWS cloud computing environment. Oracle customers can also use their existing Oracle software licenses on Amazon EC2 with no additional license fees. So, whether you’re a long-time Oracle customer or a new user, AWS can get you started quickly.

53. ____________ embodies the “share-nothing” architecture and essentially involves breaking a large database into several smaller databases. Common ways to split a database include 1) splitting tables that are not joined in the same query onto different hosts or 2) duplicating a table across multiple hosts and then using a hashing algorithm to determine which host receives a given update.

- A. Sharding

- https://forums.aws.amazon.com/thread.jspa?messageID=203052

- Sharding embodies the “share-nothing” architecture and essentially just involves breaking a larger database up into smaller databases. Common ways to split a database are:
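The hash-based routing mentioned in the question (a hashing algorithm decides which host receives a given update) can be sketched as "stable hash modulo shard count". A minimal illustration; the shard host names are hypothetical:

```python
import hashlib


def shard_for(key: str, num_shards: int) -> int:
    """Pick a shard index for a key with a stable hash (hash modulo shard count).

    hashlib is used instead of the built-in hash(), which is randomized per
    process for strings and would send the same key to different shards
    after a restart.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


# Hypothetical shard hosts: every update for the same key lands on the same host.
SHARD_HOSTS = [
    "db-shard-0.example.internal",
    "db-shard-1.example.internal",
    "db-shard-2.example.internal",
    "db-shard-3.example.internal",
]
host = SHARD_HOSTS[shard_for("customer:42", len(SHARD_HOSTS))]
print(host)
```

Plain modulo remaps most keys when the number of shards changes; consistent hashing is a common refinement when shards are added or removed often.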

54. When you resize the Amazon RDS DB instance, Amazon RDS will perform the upgrade during the next maintenance window. If you want the upgrade to be performed now, rather than waiting for the maintenance window, specify the _____ option.

- D. ApplyImmediately

- http://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/Overview.DBInstance.Modifying.html

- To apply changes immediately, select the Apply Immediately option in the AWS Management Console.
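Outside the console, the same choice is the `ApplyImmediately` parameter of the RDS ModifyDBInstance call. A minimal sketch that only builds the parameters; the instance identifier is hypothetical:

```python
def resize_request(db_instance_id: str, new_class: str, apply_now: bool = False) -> dict:
    """Build keyword arguments for RDS ModifyDBInstance (boto3: modify_db_instance).

    The instance identifier used below is hypothetical.
    """
    return {
        "DBInstanceIdentifier": db_instance_id,
        "DBInstanceClass": new_class,
        # False defers the change to the next maintenance window; True applies it now.
        "ApplyImmediately": apply_now,
    }


params = resize_request("my-database", "db.m5.large", apply_now=True)
print(params)
# With boto3 and credentials configured: boto3.client("rds").modify_db_instance(**params)
```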

55. Does Amazon Route 53 support NS Records?

- A. Yes, it supports NS (name server) records.

- https://aws.amazon.com/route53/faqs/

- Q. Which DNS record types does Amazon Route 53 support? Amazon Route 53 currently supports the following DNS record types:

- A (address record)

- AAAA (IPv6 address record)

- CNAME (canonical name record)

- MX (mail exchange record)

- NAPTR (name authority pointer record)

- NS (name server record)

- PTR (pointer record)

- SOA (start of authority record)

- SPF (sender policy framework)

- SRV (service locator)

- TXT (text record)

56. The SQL Server _____ feature is an efficient means of copying data from a source database to your DB Instance. It writes the data that you specify to a data file, such as an ASCII file.

- A. bulk copy

- http://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/SQLServer.Procedural.Importing.html

Bulk Copy

The SQL Server bulk copy feature is an efficient means of copying data from a source database to your DB instance. Bulk copy writes the data that you specify to a data file, such as an ASCII file. You can then run bulk copy again to write the contents of the file to the destination DB instance.

57. When using consolidated billing there are two account types. What are they?

- A. Paying account and Linked account

- http://docs.aws.amazon.com/awsaccountbilling/latest/aboutv2/consolidated-billing.html

- You sign up for Consolidated Billing in the AWS Billing and Cost Management console, and designate your account as a payer account. Now your account can pay the charges of the other accounts, which are called linked accounts. The payer account and the accounts linked to it are called a Consolidated Billing account family.

58. A __________ is a document that provides a formal statement of one or more permissions.

- A. policy

- http://docs.aws.amazon.com/IAM/latest/UserGuide/access_policies.html

- A policy consists of one or more statements, each of which describes one set of permissions.
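A policy document with two statements might look like the following. This is a minimal sketch that follows the IAM JSON policy grammar (`Version`, `Statement`, `Effect`, `Action`, `Resource`); the bucket name is hypothetical:

```python
import json

# Two statements, each describing one set of permissions.
# "example-bucket" is a hypothetical bucket name.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "ListBucket",
            "Effect": "Allow",
            "Action": "s3:ListBucket",
            "Resource": "arn:aws:s3:::example-bucket",
        },
        {
            "Sid": "ReadWriteObjects",
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:PutObject"],
            "Resource": "arn:aws:s3:::example-bucket/*",
        },
    ],
}

print(json.dumps(policy, indent=2))
```

Bucket-level actions (`s3:ListBucket`) and object-level actions (`s3:GetObject`, `s3:PutObject`) target different ARNs, which is why they sit in separate statements.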

59. In Amazon RDS for SQL Server, what is the maximum storage size for a Microsoft SQL Server DB instance running SQL Server Express edition?

- 300 GB

- http://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/CHAP_SQLServer.html

- The minimum storage size for a SQL Server DB instance is 20 GB for the Express and Web editions, and 200 GB for the Standard and Enterprise editions. The maximum storage size for a SQL Server DB instance is 4 TB for the Enterprise, Standard, and Web editions, and 300 GB for the Express edition.
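The storage limits quoted above can be turned into a pre-flight check. A minimal sketch: the figures are taken from the quote (they may have changed since), 4 TB is treated as 4,096 GB as an assumption, and `SQLSERVER_STORAGE_LIMITS_GB` and `storage_is_valid` are hypothetical names, not an AWS API:

```python
# (minimum, maximum) storage in GB per edition, as quoted above.
# 4 TB is taken as 4,096 GB here, which is an assumption.
SQLSERVER_STORAGE_LIMITS_GB = {
    "express": (20, 300),
    "web": (20, 4096),
    "standard": (200, 4096),
    "enterprise": (200, 4096),
}


def storage_is_valid(edition: str, size_gb: int) -> bool:
    """Return True if size_gb falls inside the quoted range for the edition."""
    low, high = SQLSERVER_STORAGE_LIMITS_GB[edition.lower()]
    return low <= size_gb <= high


print(storage_is_valid("Express", 300))   # True
print(storage_is_valid("Express", 500))   # False
print(storage_is_valid("Standard", 100))  # False
```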

60. Regarding the attaching of ENI to an instance, what does ‘warm attach’ refer to?

- A. Attaching an ENI to an instance when it is stopped.

- http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/using-eni.html#best-practices-for-configuring-network-interfaces

- You can attach a network interface to an instance

- when it’s running (hot attach),

- when it’s stopped (warm attach), or

- when the instance is being launched (cold attach).

61. If I scale the storage capacity provisioned to my DB Instance by mid of a billing month, how will I be charged?

- B. On a proration basis

- https://aws.amazon.com/rds/faqs/#15

- Q: How will I be charged and billed for my use of Amazon RDS?You pay only for what you use, and there are no minimum or setup fees. You are billed based on:

- Storage (per GB per month) – Storage capacity you have provisioned to your DB instance. If you scale your provisioned storage capacity within the month, your bill will be pro-rated.
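The pro-rating described above amounts to averaging provisioned storage over the month. A minimal sketch, assuming day-level granularity and a hypothetical price of $0.10 per GB-month (actual RDS pricing and metering granularity may differ):

```python
from __future__ import annotations


def prorated_storage_charge(
    segments: list[tuple[int, int]], days_in_month: int, price_per_gb_month: float
) -> float:
    """Charge for provisioned storage that changed size during a month.

    segments: (provisioned_gb, days_at_that_size) pairs that together cover the month.
    """
    if sum(days for _, days in segments) != days_in_month:
        raise ValueError("segments must cover the whole month")
    gb_days = sum(gb * days for gb, days in segments)
    gb_months = gb_days / days_in_month  # average provisioned size over the month
    return gb_months * price_per_gb_month


# 100 GB for 15 days, then scaled to 200 GB for the remaining 15 days of a
# 30-day month, at a hypothetical $0.10 per GB-month: billed as 150 GB-months.
print(round(prorated_storage_charge([(100, 15), (200, 15)], 30, 0.10), 2))  # 15.0
```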

62. You can modify the backup retention period; valid values are 0 (for no backup retention) to a maximum of ___________ days.

- B. 35

- http://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/USER_WorkingWithAutomatedBackups.html

- You can set the backup retention period to between 1 and 35 days.

63. A Provisioned IOPS volume must be at least __________ GB in size

- D. 10

- This question is outdated. The current size range for a Provisioned IOPS (io1) volume is 4 GB to 16 TB (see https://aws.amazon.com/ebs/details/); the answer expected by the original question is D (10 GB).

64. Will I be alerted when automatic failover occurs?

- C. Yes (For RDS)

- A. Only if SNS is configured (for others)

- https://aws.amazon.com/rds/faqs/

- Q: Will I be alerted when automatic failover occurs?

- Yes, Amazon RDS will emit a DB instance event to inform you that automatic failover occurred. You can click the “Events” section of the Amazon RDS Console or use the DescribeEvents API to return information about events related to your DB instance. You can also use Amazon RDS Event Notifications to be notified when specific DB events occur.

65. How can an EBS volume that is currently attached to an EC2 instance be migrated from one Availability Zone to another?

- C. Create a snapshot of the volume, and create a new volume from the snapshot in the other AZ.

- http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/EBSVolumes.html

- These snapshots can be used to create multiple new EBS volumes or move volumes across Availability Zones.

66. If you’re unable to connect via SSH to your EC2 instance, which of the following should you check and possibly correct to restore connectivity?

- D. Adjust the instance’s Security Group to permit ingress traffic over port 22 from your IP.

- http://docs.aws.amazon.com/cli/latest/reference/ec2/authorize-security-group-ingress.html

- To add a rule that allows inbound SSH traffic: This example enables inbound traffic on TCP port 22 (SSH). If the command succeeds, no output is returned.

- [EC2-VPC] To add a rule that allows inbound SSH traffic: This example enables inbound traffic on TCP port 22 (SSH). Note that you can’t reference a security group for EC2-VPC by name. If the command succeeds, no output is returned.
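The same SSH rule can be expressed as an `IpPermissions` entry for the EC2 `AuthorizeSecurityGroupIngress` call. A minimal sketch that only builds the rule; the client IP (a documentation address) and the security group ID in the comment are hypothetical:

```python
import ipaddress


def ssh_ingress_permission(client_ip: str) -> dict:
    """Build one IpPermissions entry that allows SSH (TCP port 22) from a single IPv4 address."""
    # Normalize to a /32 CIDR block; raises ValueError for a malformed address.
    cidr = str(ipaddress.IPv4Network(f"{client_ip}/32"))
    return {
        "IpProtocol": "tcp",
        "FromPort": 22,
        "ToPort": 22,
        "IpRanges": [{"CidrIp": cidr, "Description": "SSH from admin workstation"}],
    }


perm = ssh_ingress_permission("203.0.113.25")
print(perm["IpRanges"][0]["CidrIp"])  # 203.0.113.25/32
# With boto3 and credentials configured (GroupId is hypothetical):
# boto3.client("ec2").authorize_security_group_ingress(
#     GroupId="sg-0123456789abcdef0", IpPermissions=[perm])
```

Restricting the source to a single /32 address is narrower than opening port 22 to 0.0.0.0/0 and is usually the safer choice.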

67. Which of the following features ensures even distribution of traffic to Amazon EC2 instances in multiple Availability Zones registered with a load balancer?

- A. Elastic Load Balancing request routing

- B. An Amazon Route 53 weighted routing policy

- C. Elastic Load Balancing cross-zone load balancing

- D. An Amazon Route 53 latency routing policy

- http://docs.aws.amazon.com/elasticloadbalancing/latest/userguide/how-elastic-load-balancing-works.html

- Cross-zone load balancing is always enabled for an Application Load Balancer and is disabled by default for a Classic Load Balancer. If cross-zone load balancing is enabled, the load balancer distributes traffic evenly across all registered instances in all enabled Availability Zones.
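The effect of cross-zone load balancing on an uneven deployment can be worked out numerically. A minimal sketch, assuming clients spread requests evenly across the load balancer's per-AZ nodes (a simplification); the AZ names and instance counts are hypothetical:

```python
from __future__ import annotations


def per_instance_share(instances_per_az: dict[str, int], cross_zone: bool) -> dict[str, float]:
    """Fraction of all requests that each instance in a given AZ receives.

    Assumes clients spread requests evenly across the load balancer's per-AZ
    nodes (one node per enabled AZ), which is a simplification.
    """
    total = sum(instances_per_az.values())
    shares = {}
    for az, count in instances_per_az.items():
        if cross_zone:
            # Every registered instance in every enabled AZ gets an equal slice.
            shares[az] = 1 / total
        else:
            # Each AZ's node receives 1/len(AZs) of the traffic and splits it locally.
            shares[az] = (1 / len(instances_per_az)) / count
    return shares


azs = {"us-east-1a": 2, "us-east-1b": 8}
print(per_instance_share(azs, cross_zone=False))  # {'us-east-1a': 0.25, 'us-east-1b': 0.0625}
print(per_instance_share(azs, cross_zone=True))   # {'us-east-1a': 0.1, 'us-east-1b': 0.1}
```

Without cross-zone load balancing, the two instances in the smaller AZ each carry four times the load of an instance in the larger AZ; with it enabled, the load evens out.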

68. You are using an m1.small EC2 Instance with one 300 GB EBS volume to host a relational database. You determined that write throughput to the database needs to be increased. Which of the following approaches can help achieve this? Choose 2 answers

- A. Use an array of EBS volumes.

- B. Enable Multi-AZ mode.

- C. Place the instance in an Auto Scaling group.

- D. Add an EBS volume and place it into RAID 5.

- E. Increase the size of the EC2 instance.

- http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/raid-config.html

- Creating a RAID 0 array allows you to achieve a higher level of performance for a file system than you can provision on a single Amazon EBS volume. A RAID 1 array offers a “mirror” of your data for extra redundancy. Before you perform this procedure, you need to decide how large your RAID array should be and how many IOPS you want to provision.

69. After launching an instance that you intend to serve as a NAT (Network Address Translation) device in a public subnet, you modify your route tables to have the NAT device be the target of internet-bound traffic from your private subnet. When you try to make an outbound connection to the internet from an instance in the private subnet, you are not successful. Which of the following steps could resolve the issue?

- A. Disabling the Source/Destination Check attribute on the NAT instance

- http://docs.aws.amazon.com/AmazonVPC/latest/UserGuide/VPC_NAT_Instance.html#EIP_Disable_SrcDestCheck

- Each EC2 instance performs source/destination checks by default. This means that the instance must be the source or destination of any traffic it sends or receives. However, a NAT instance must be able to send and receive traffic when the source or destination is not itself. Therefore, you must disable source/destination checks on the NAT instance.

70. You are building a solution for a customer to extend their on-premises data center to AWS. The customer requires a 50-Mbps dedicated and private connection to their VPC. Which AWS product or feature satisfies this requirement?

- C. AWS Direct Connect

- https://aws.amazon.com/directconnect/faqs/

- Q. What connection speeds are supported by AWS Direct Connect?

1 Gbps and 10 Gbps ports are available. Speeds of 50 Mbps, 100 Mbps, 200 Mbps, 300 Mbps, 400 Mbps, and 500 Mbps can be ordered from any APN Partner supporting AWS Direct Connect. Read more about APN Partners supporting AWS Direct Connect.

71. When using the following AWS services, which should be implemented in multiple Availability Zones for high availability solutions? Choose 2 answers

- B. Amazon Elastic Compute Cloud (EC2)

- C. Amazon Elastic Load Balancing

72. You have a video transcoding application running on Amazon EC2. Each instance polls a queue to find out which video should be transcoded, and then runs a transcoding process. If this process is interrupted, the video will be transcoded by another instance based on the queuing system. You have a large backlog of videos which need to be transcoded and would like to reduce this backlog by adding more instances. You will need these instances only until the backlog is reduced. Which type of Amazon EC2 instances should you use to reduce the backlog in the most cost efficient way?

- B. Spot instances

- https://aws.amazon.com/ec2/faqs/

- https://aws.amazon.com/ec2/pricing/

- Pricing :

- On-Demand instances: you pay for compute capacity by the hour with no long-term commitments or upfront payments.

- Spot instances: you bid on spare Amazon EC2 computing capacity for up to 90% off the On-Demand price.

- Reserved Instances: you with a significant discount (up to 75%) compared to On-Demand instance pricing.

- Dedicated Host: Can be purchased as a Reservation for up to 70% off the On-Demand price.

- On-Demande for :

- Users that prefer the low cost and flexibility of Amazon EC2 without any up-front payment or long-term commitment

- Applications with short-term, spiky, or unpredictable workloads that cannot be interrupted

- Applications being developed or tested on Amazon EC2 for the first time

- Spot for:

- Applications that have flexible start and end times

- Applications that are only feasible at very low compute prices

- Users with urgent computing needs for large amounts of additional capacity

- Reserved for:

- Applications with steady-state usage

- Applications that may require reserved capacity

- Customers that can commit to using EC2 over a 1 or 3 year term to reduce their total computing costs

- Dedicated Hosts:

- Can be purchased On-Demand (hourly).

- Can be purchased as a Reservation for up to 70% off the On-Demand price.

73. You have an EC2 Security Group with several running EC2 instances. You change the Security Group rules to allow inbound traffic on a new port and protocol, and launch several new instances in the same Security Group. The new rules apply:

- A. Immediately to all instances in the security group.

- http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/using-network-security.html#security-group-rules

- You can add and remove rules at any time. Your changes are automatically applied to the instances associated with the security group after a short period.

74. Which services allow the customer to retain full administrative privileges of the underlying EC2 instances? Choose 2 answers

- B. Amazon Elastic Map Reduce

- E. AWS Elastic Beanstalk

- To answer this question, check whether the underlying EC2 instances are visible (and administrable) in each AWS service:

- RDS: No access to underlying EC2 instances; you manage DB instances instead.

- Amazon ElastiCache: No access to underlying EC2 instances.

- Amazon DynamoDB: No underlying EC2 instances are exposed; any EC2 capacity AWS uses internally (e.g. for replication) needs no administrative permissions from you.

- EMR: Q: What is Amazon EMR? Amazon EMR is a web service that enables businesses, researchers, data analysts, and developers to easily and cost-effectively process vast amounts of data. It utilizes a hosted Hadoop framework running on the web-scale infrastructure of Amazon Elastic Compute Cloud (Amazon EC2) and Amazon Simple Storage Service (Amazon S3).

- Elastic Beanstalk: Q: What are the Cloud resources powering my AWS Elastic Beanstalk application? AWS Elastic Beanstalk uses proven AWS features and services, such as Amazon EC2, Amazon RDS, Elastic Load Balancing, Auto Scaling, Amazon S3, and Amazon SNS, to create an environment that runs your application. The current version of AWS Elastic Beanstalk uses the Amazon Linux AMI or the Windows Server 2012 R2 AMI.

75. A company is building a two-tier web application to serve dynamic transaction-based content. The data tier is leveraging an Online Transactional Processing (OLTP) database. What services should you leverage to enable an elastic and scalable web tier?

- A. Elastic Load Balancing, Amazon EC2, and Auto Scaling

- This is about scaling the web tier and no other tier.

Exam-taking tip: when two answers both look correct, something in the question points to one of them. Here it is the phrase “What services should you leverage to enable an elastic and scalable web tier?”

The answer is in the question.

76. Your application provides data transformation services. Files containing data to be transformed are first uploaded to Amazon S3 and then transformed by a fleet of spot EC2 instances. Files submitted by your premium customers must be transformed with the highest priority. How should you implement such a system?

- C. Use two SQS queues, one for high priority messages, the other for default priority. Transformation instances first poll the high priority queue; if there is no message, they poll the default priority queue.

- https://aws.amazon.com/sqs/details/

- Priority: Use separate queues to provide prioritization of work.

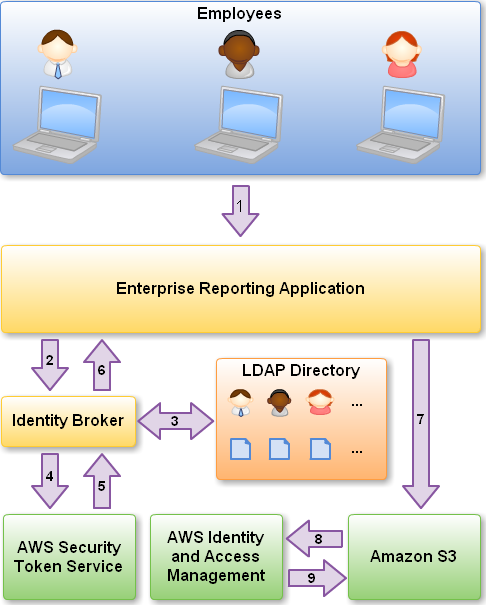
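The two-queue priority pattern from answer C can be sketched as follows. This is a minimal illustration, assuming in-memory `collections.deque` objects stand in for the two SQS queues; a real worker would call SQS ReceiveMessage on each queue URL and delete the message after processing. The function name `next_job` is hypothetical.

```python
from collections import deque
from typing import Optional


def next_job(high_priority: deque, default_priority: deque) -> Optional[str]:
    """Return the next job, always checking the high-priority queue first.

    The deques stand in for two SQS queues; popleft() plays the role of
    receiving (and then deleting) one message.
    """
    if high_priority:
        return high_priority.popleft()
    if default_priority:
        return default_priority.popleft()
    return None  # both queues empty: a real worker would wait and poll again


premium = deque(["premium-file-1.csv"])
standard = deque(["standard-file-1.csv", "standard-file-2.csv"])
premium.append("premium-file-2.csv")

order = []
job = next_job(premium, standard)
while job is not None:
    order.append(job)
    job = next_job(premium, standard)
print(order)  # both premium files come out before any standard file
```

Because every poll checks the high-priority queue first, premium files are never stuck behind a backlog of default-priority work.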

77. Which technique can be used to integrate AWS IAM (Identity and Access Management) with an on-premise LDAP (Lightweight Directory Access Protocol) directory service?

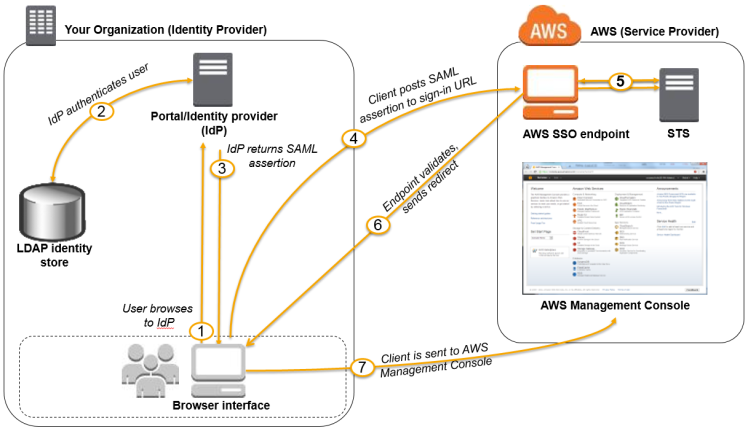

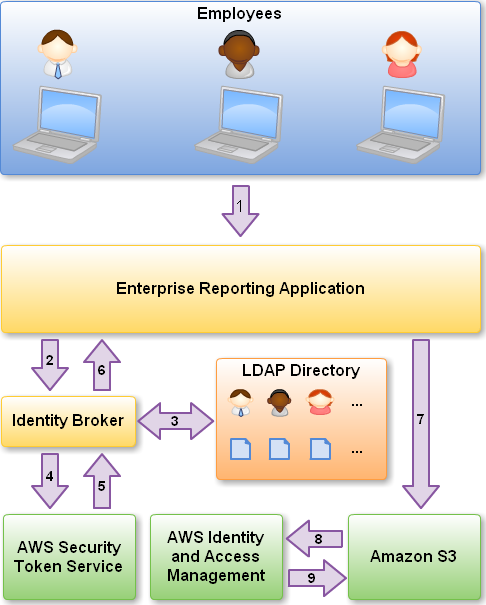

- B. Use SAML (Security Assertion Markup Language) to enable single sign-on between AWS and LDAP.

- C. Use AWS Security Token Service from an identity broker to issue short-lived AWS credentials.

78. Which of the following are characteristics of Amazon VPC subnets? Choose 2 answers

- B. Each subnet maps to a single Availability Zone.

- C. CIDR block mask of /25 is the smallest range supported.

- D. By default, all subnets can route between each other, whether they are private or public.

- B: RIGHT. Each subnet must reside entirely within one Availability Zone and cannot span zones http://docs.aws.amazon.com/AmazonVPC/latest/UserGuide/VPC_Subnets.html

- C: WRONG. You can assign a single CIDR block to a VPC. The allowed block size is between a /16 netmask and a /28 netmask, so /28 (not /25) is the smallest range supported. From http://docs.aws.amazon.com/AmazonVPC/latest/UserGuide/VPC_Subnets.html
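To check the /16 to /28 range numerically, the sketch below uses Python's standard `ipaddress` module to count addresses per prefix length. It also subtracts the five addresses AWS reserves in every subnet (the first four and the last one). The 10.0.0.0 base address and the helper names are illustrative only.

```python
import ipaddress

AWS_RESERVED_PER_SUBNET = 5  # first four addresses + last address of every subnet


def usable_hosts(cidr: str) -> int:
    """IPv4 addresses left for your instances after AWS's reservations."""
    return ipaddress.ip_network(cidr).num_addresses - AWS_RESERVED_PER_SUBNET


def is_allowed_size(cidr: str) -> bool:
    """True if the prefix length lies in the allowed /16 to /28 range."""
    prefix = ipaddress.ip_network(cidr).prefixlen
    return 16 <= prefix <= 28


for cidr in ["10.0.0.0/16", "10.0.0.0/25", "10.0.0.0/28", "10.0.0.0/29"]:
    allowed = is_allowed_size(cidr)
    print(cidr, allowed, usable_hosts(cidr) if allowed else "n/a")
```

A /28 gives 16 addresses (11 usable), which is why /25 is not the smallest size.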

79. A customer is leveraging Amazon Simple Storage Service in eu-west-1 to store static content for a web-based property. The customer is storing objects using the Standard Storage class. Where are the customer’s objects replicated?

- C. Multiple facilities in eu-west-1

- facilities: here, physically separate data centers within the Region (not “devices”)

- http://docs.aws.amazon.com/AmazonS3/latest/dev/Introduction.html#Regions

- Amazon S3 achieves high availability by replicating data across multiple servers within Amazon’s data centers.

80. Your web application front end consists of multiple EC2 instances behind an Elastic Load Balancer. You configured ELB to perform health checks on these EC2 instances. If an instance fails to pass health checks, which statement will be true?

- D. The ELB stops sending traffic to the instance that failed its health check.

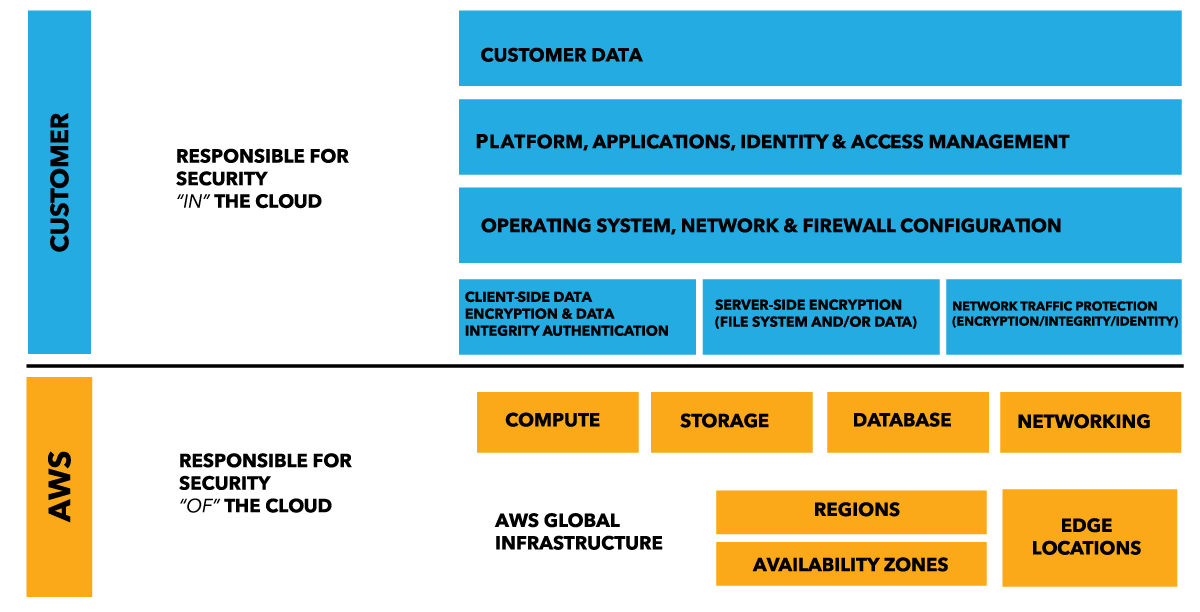

81. In AWS, which security aspects are the customer’s responsibility? Choose 4 answers (Important: review again and again. yizhe)

- A. Security Group and ACL (Access Control List) settings

- C. Patch management on the EC2 instance’s operating system

- D. Life-cycle management of IAM credentials

- F. Encryption of EBS (Elastic Block Store) volumes

- https://aws.amazon.com/compliance/shared-responsibility-model/

82. You have a web application running on six Amazon EC2 instances, consuming about 45% of resources on each instance. You are using auto-scaling to make sure that six instances are running at all times. The number of requests this application processes is consistent and does not experience spikes. The application is critical to your business and you want high availability at all times. You want the load to be distributed evenly between all instances. You also want to use the same Amazon Machine Image (AMI) for all instances. Which of the following architectural choices should you make?

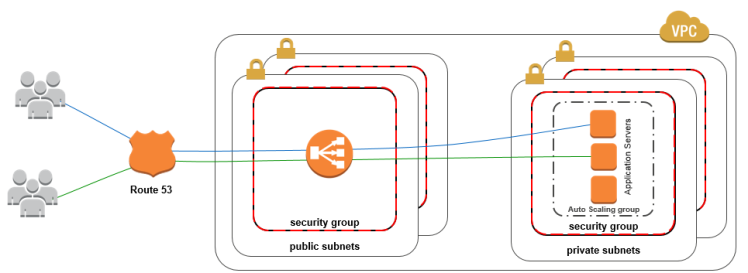

- C. Deploy 3 EC2 instances in one availability zone and 3 in another availability zone and use Amazon Elastic Load Balancer.

- C is correct. If one AZ fails, you still have 3 instances in the other AZ, which is 50% of capacity. Since current utilization is 45% on each of the six instances, the surviving three can still serve the full load (about 90% each). Auto Scaling, which is configured to keep six instances running, then launches replacement instances to restore normal capacity.
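The capacity arithmetic behind option C can be written out as a small calculation. This is a simplified sketch, assuming the load balancer spreads load evenly and that load per instance scales linearly with the number of instances; the function name is hypothetical.

```python
def load_after_failure(instances: int, utilization: float, failed: int) -> float:
    """Utilization each surviving instance must carry after `failed` instances are lost.

    Total load (in "instance-equivalents") stays the same; it is simply
    spread over fewer instances.
    """
    survivors = instances - failed
    if survivors <= 0:
        raise ValueError("no surviving instances")
    return instances * utilization / survivors


# Option C: 6 instances at 45%, split 3 + 3 across two AZs, and one AZ fails.
print(f"{load_after_failure(6, 0.45, 3):.0%} per surviving instance")
```

Putting all six instances in one AZ would leave no survivors, which is why the instances are split across two AZs.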

89. You have decided to change the instance type for instances running in your application tier that is using Auto Scaling. In which area below would you change the instance type definition?

- D. Auto Scaling launch configuration

- http://docs.aws.amazon.com/autoscaling/latest/userguide/LaunchConfiguration.html

- If you want to change the launch configuration for your Auto Scaling group, you must create a new launch configuration and then update your Auto Scaling group with it. When you change the launch configuration for your Auto Scaling group, any new instances are launched using the new configuration parameters, but existing instances are not affected.

90. When an EC2 EBS-backed (EBS root) instance is stopped, what happens to the data on any ephemeral store volumes?

- C. Data will be deleted and will no longer be accessible.

- http://www.n2ws.com/how-to-guides/ephemeral-storage-on-ebs-volume.html

- About ephemeral storage: Ephemeral storage is the volatile, temporary storage attached to your instance, which is only present during the running lifetime of the instance. If the instance is stopped or terminated, or the underlying hardware fails, any data stored on ephemeral storage is lost. This storage is located on disks physically attached to the host computer. It is a fast-performing storage solution, but a non-persistent one, compared to EBS volumes.

- To remember: http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/EBSVolumes.html

- If you are using an EBS-backed instance, you can stop and restart that instance without affecting the data stored in the attached volume. The volume remains attached throughout the stop-start cycle. This enables you to process and store the data on your volume indefinitely, only using the processing and storage resources when required. The data persists on the volume until the volume is deleted explicitly. The physical block storage used by deleted EBS volumes is overwritten with zeroes before it is allocated to another account.

91. Which of the following items are required to allow an application deployed on an EC2 instance to write data to a DynamoDB table? Assume that no security keys are allowed to be stored on the EC2 instance. (Choose 2 answers)

- A. Create an IAM Role that allows write access to the DynamoDB table.

- E. Launch an EC2 Instance with the IAM Role included in the launch configuration.

92. When you put objects in Amazon S3, what is the indication that an object was successfully stored?

- A. An HTTP 200 result code and MD5 checksum, taken together, indicate that the operation was successful.

- http://docs.aws.amazon.com/AmazonS3/latest/API/RESTObjectPOST.html

- If you receive a successful response, you can be confident the entire object was stored.
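The MD5 part of answer A can be illustrated with the standard library. The `Content-MD5` request header carries the base64-encoded 128-bit MD5 digest of the body, which S3 uses to reject corrupted uploads. For a single-part PUT of an unencrypted or SSE-S3 object, the returned ETag is normally the hex MD5 of the data (this does not hold for multipart uploads). A minimal sketch; the payload is made up and no request is actually sent.

```python
import base64
import hashlib


def content_md5_header(body: bytes) -> str:
    """Value for the Content-MD5 header: base64 of the raw 16-byte MD5 digest."""
    return base64.b64encode(hashlib.md5(body).digest()).decode("ascii")


def etag_matches(body: bytes, etag: str) -> bool:
    """Compare a single-part upload's ETag (hex MD5, quoted by S3) with local data."""
    return etag.strip('"') == hashlib.md5(body).hexdigest()


payload = b"hello world"
print(content_md5_header(payload))
print(etag_matches(payload, '"5eb63bbbe01eeed093cb22bb8f5acdc3"'))
```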

93. What is one key difference between an Amazon EBS-backed and an instance-store backed instance?

- A. Amazon EBS-backed instances can be stopped and restarted.

- http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/Stop_Start.html

- You can start and stop your Amazon EBS-backed instance using the console or the command line.

- When you stop an instance, the data on any instance store volumes is erased. Therefore, if you have any data on instance store volumes that you want to keep, be sure to back it up to persistent storage.

94. A company wants to implement their website in a virtual private cloud (VPC). The web tier will use an Auto Scaling group across multiple Availability Zones (AZs). The database will use Multi-AZ RDS MySQL and should not be publicly accessible. What is the minimum number of subnets that need to be configured in the VPC?

- B. 2

- D. 4

- http://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/USER_VPC.WorkingWithRDSInstanceinaVPC.html

- When you launch a DB instance inside a VPC, you can designate whether the DB instance you create has a DNS that resolves to a public IP address by using the PubliclyAccessible parameter. This parameter lets you designate whether there is public access to the DB instance. Note that access to the DB instance is ultimately controlled by the security group it uses, and that public access is not permitted if the security group assigned to the DB instance does not permit it.

- yizhe: In my view, 2 public subnets in the VPC are enough, as long as the DB instance’s security group only allows traffic from private CIDR ranges.

95. You have launched an Amazon Elastic Compute Cloud (EC2) instance into a public subnet with a primary private IP address assigned, an internet gateway is attached to the VPC, and the public route table is configured to send all Internet-based traffic to the Internet gateway. The instance security group is set to allow all outbound traffic but cannot access the internet. Why is the Internet unreachable from this instance?

- A. The instance does not have a public IP address.

- https://docs.aws.amazon.com/AmazonVPC/latest/UserGuide/VPC_Internet_Gateway.html

- To enable communication over the Internet for IPv4, your instance must have a public IPv4 address or an Elastic IP address that’s associated with a private IPv4 address on your instance. Your instance is only aware of the private (internal) IP address space defined within the VPC and subnet. The Internet gateway logically provides the one-to-one NAT on behalf of your instance, so that when traffic leaves your VPC subnet and goes to the Internet, the reply address field is set to the public IPv4 address or Elastic IP address of your instance, and not its private IP address. Conversely, traffic that’s destined for the public IPv4 address or Elastic IP address of your instance has its destination address translated into the instance’s private IPv4 address before the traffic is delivered to the VPC.

96. You launch an Amazon EC2 instance without an assigned AWS identity and Access Management (IAM) role. Later, you decide that the instance should be running with an IAM role. Which action must you take in order to have a running Amazon EC2 instance with an IAM role assigned to it?

- D. Create an image of the instance, and use this image to launch a new instance with the desired IAM role assigned.

- Creating an image preserves the instance’s configuration and data. However, AWS now allows you to attach an IAM role to a running EC2 instance, so launching a new instance is no longer required.

- http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/iam-roles-for-amazon-ec2.html

- Specify the role when you launch your instance, or attach the role to a running or stopped instance

97. How can the domain’s zone apex, for example, “myzoneapexdomain.com”, be pointed towards an Elastic Load Balancer?

- A. By using an Amazon Route 53 Alias record

- http://docs.aws.amazon.com/govcloud-us/latest/UserGuide/setting-up-route53-zoneapex-elb.html

- Amazon Route 53 supports the alias resource record set, which lets you map your zone apex (e.g. example.com) DNS name to your load balancer DNS name.

- An A record specifies the IPv4 address for a given host.

- An AAAA (quad-A) record specifies the IPv6 address for a given host.

- A CNAME record specifies a domain name that has to be queried in order to resolve the original DNS query, so CNAME records are used to create aliases of domain names. They are most useful when aliasing your domain to an external domain; in other cases you can replace CNAME records with A records and reduce lookup overhead. A CNAME cannot be created at the zone apex, because the apex must also hold the SOA and NS records and a CNAME cannot coexist with other records. This is why an alias record is needed for the apex.

- More info: http://docs.aws.amazon.com/Route53/latest/DeveloperGuide/resource-record-sets-choosing-alias-non-alias.html

98. An instance is launched into a VPC subnet with the network ACL configured to allow all inbound traffic and deny all outbound traffic. The instance’s security group is configured to allow SSH from any IP address and deny all outbound traffic. What changes need to be made to allow SSH access to the instance?

- B. The outbound network ACL needs to be modified to allow outbound traffic.

- http://docs.aws.amazon.com/AmazonVPC/latest/UserGuide/VPC_ACLs.html

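- ACLs are stateless, but security groups are stateful: the response to an allowed inbound SSH connection is let out regardless of the security group’s outbound rules, so only the network ACL needs changing.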

- Network ACLs are stateless; responses to allowed inbound traffic are subject to the rules for outbound traffic (and vice versa).
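The stateless/stateful difference in question 98 can be modeled with a toy Python sketch. This is not an AWS API: real ACL rules are numbered and port/CIDR-specific, and the SSH response leaves on an ephemeral port. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class NetworkAcl:
    """Stateless filter: inbound and outbound are evaluated independently."""
    allow_inbound: bool
    allow_outbound: bool


@dataclass
class SecurityGroup:
    """Stateful filter: responses to allowed inbound requests are always allowed out."""
    allow_inbound_ssh: bool
    allow_outbound: bool  # only matters for connections the instance itself initiates


def ssh_session_works(acl: NetworkAcl, sg: SecurityGroup) -> bool:
    # Request path: client -> subnet (ACL inbound) -> instance (SG inbound).
    request_ok = acl.allow_inbound and sg.allow_inbound_ssh
    # Response path: the SG tracks the connection, so its outbound rules are skipped;
    # the stateless ACL must still explicitly allow the outbound response traffic.
    response_ok = acl.allow_outbound
    return request_ok and response_ok


acl = NetworkAcl(allow_inbound=True, allow_outbound=False)
sg = SecurityGroup(allow_inbound_ssh=True, allow_outbound=False)
print(ssh_session_works(acl, sg))  # False: the scenario in question 98
acl.allow_outbound = True          # answer B
print(ssh_session_works(acl, sg))  # True
```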

99. For which of the following use cases are Simple Workflow Service (SWF) and Amazon EC2 an appropriate solution? Choose 2 answers

- B. Managing a multi-step and multi-decision checkout process of an e-commerce website

- C. Orchestrating the execution of distributed and auditable business processes

- http://www.aiotestking.com/amazon/which-of-the-following-use-cases-are-simple-workflow-service-swf-and-amazon-ec2-an-appropriate-solution/

- A. Using as an endpoint to collect thousands of data points per hour from a distributed fleet of sensors

- This is a far more applicable scenario for a Kinesis stream. Have the sensors send data into the stream, then process it out of the stream (e.g. with a Lambda function that uploads to DynamoDB for further analysis, or into CloudWatch if you just want to plot the sensor data as a time series).

- B. Managing a multi-step and multi-decision checkout process of an e-commerce website

- Ideal scenario for SWF. Track the progress of the checkout process as it proceeds through the multiple steps.

- C. Orchestrating the execution of distributed and auditable business processes

- Also good for SWF. The key words in the question are “process” and “distributed”. If you have multiple components involved in the process, and you need to keep them all apprised of the current state or stage of the process, SWF can help.

- D. Using as an SNS (Simple Notification Service) endpoint to trigger execution of video transcoding jobs

- This is a potential scenario for Lambda, which can take an SNS notification as a triggering event. Lambda kicks off the transcoding job (or drops the piece of work into an SQS queue that workers pull from to kick off the transcoding job).

- E. Using as a distributed session store for your web application

- Not applicable for SWF at all. As for how you might do this, the key word here is “distributed”. If you wanted to store session state data for a web session on a single web server, you could just put it in scratch space on the instance (e.g. an ephemeral/instance-store drive mounted to the instance). But this is “distributed”, meaning multiple web instances are in play. If one instance fails, you want session state to still be maintained when the user’s traffic moves to a different web server. (It wouldn’t be acceptable for users to have two items in their shopping cart, be ready to check out, have the instance they were on fail, have their traffic go to another web instance, and see their shopping cart suddenly show up as empty.) So you save their session state to an external session store. If the session state only needs to be maintained for, say, 24 hours, ElastiCache is a good solution. If the session state needs to be maintained for a long period of time, store it in DynamoDB.

- https://aws.amazon.com/swf/faqs/

- Amazon SWF enables applications for a range of use cases, including media processing, web application back-ends, business process workflows, and analytics pipelines, to be designed as a coordination of tasks.

100. A customer wants to leverage Amazon Simple Storage Service (S3) and Amazon Glacier as part of their backup and archive infrastructure. The customer plans to use third-party software to support this integration. Which approach will limit the access of the third party software to only the Amazon S3 bucket named “company-backup”?

- D. A custom IAM user policy limited to the Amazon S3 API in “company-backup”.

- https://aws.amazon.com/blogs/security/iam-policies-and-bucket-policies-and-acls-oh-my-controlling-access-to-s3-resources/

- If you’re still unsure of which to use, consider which audit question is most important to you:

- If you’re more interested in “What can this user do in AWS?” then IAM policies are probably the way to go. You can easily answer this by looking up an IAM user and then examining their IAM policies to see what rights they have.

- If you’re more interested in “Who can access this S3 bucket?” then S3 bucket policies will likely suit you better. You can easily answer this by looking up a bucket and examining the bucket policy.
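An IAM user policy for answer D could look like the document built below. This is a hedged sketch: it uses the standard IAM policy JSON shape, scopes access to the `company-backup` bucket ARN and its objects, and would be attached to the IAM user the third-party software signs in as. `s3:*` is used for brevity; in practice a tighter action list (for example `s3:ListBucket`, `s3:GetObject`, `s3:PutObject`) is preferable.

```python
import json


def bucket_only_policy(bucket: str) -> dict:
    """IAM identity policy granting S3 actions only on one bucket and its objects."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "s3:*",
                "Resource": [
                    f"arn:aws:s3:::{bucket}",    # bucket-level actions, e.g. ListBucket
                    f"arn:aws:s3:::{bucket}/*",  # object-level actions, e.g. PutObject
                ],
            }
        ],
    }


print(json.dumps(bucket_only_policy("company-backup"), indent=2))
```

Because IAM denies by default, any request against a different bucket is not allowed by this policy.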

101. A client application requires operating system privileges on a relational database server. What is an appropriate configuration for a highly available database architecture?

- D. Amazon EC2 instances in a replication configuration utilizing two different Availability Zones

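- The question never asks for RDS. It just says a relational database server, which could be a self-managed database such as MySQL running on EC2. Because the client needs operating system privileges, which standard Amazon RDS does not provide, EC2 instances replicating across two Availability Zones are the appropriate choice.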

102. What is a placement group?

- B. A feature that enables EC2 instances to interact with each other via high bandwidth, low latency connections

- http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/placement-groups.html

- A placement group is a logical grouping of instances within a single Availability Zone. Placement groups are recommended for applications that benefit from low network latency, high network throughput, or both. To provide the lowest latency, and the highest packet-per-second network performance for your placement group, choose an instance type that supports enhanced networking.

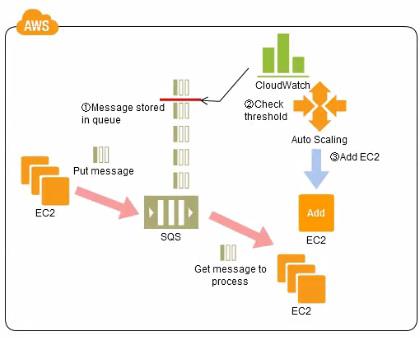

103. A company has a workflow that sends video files from their on-premise system to AWS for transcoding. They use EC2 worker instances that pull transcoding jobs from SQS. Why is SQS an appropriate service for this scenario?

- D. SQS helps to facilitate horizontal scaling of encoding tasks.

- http://docs.aws.amazon.com/AWSSimpleQueueService/latest/SQSDeveloperGuide/throughput.html

- Because you access Amazon SQS through an HTTP request-response protocol, the request latency (the time interval between initiating a request and receiving a response) limits the throughput that you can achieve from a single thread over a single connection. For example, if the latency from an Amazon Elastic Compute Cloud (Amazon EC2) based client to Amazon SQS in the same region averages around 20 ms, the maximum throughput from a single thread over a single connection will average 50 operations per second.
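The throughput figure quoted above follows from one round trip per call, which is also why adding workers (horizontal scaling) raises throughput. A minimal sketch; the 500 operations/second target is a hypothetical number.

```python
import math


def max_ops_per_second(latency_ms: float, threads: int = 1) -> float:
    """Upper bound for request/response calls: each thread waits one round trip per call."""
    return threads * 1000.0 / latency_ms


def threads_needed(target_ops_per_second: float, latency_ms: float) -> int:
    """Smallest number of concurrent threads (or worker instances) that reaches the target."""
    return math.ceil(target_ops_per_second / max_ops_per_second(latency_ms))


print(max_ops_per_second(20))   # 50.0, matching the SQS documentation example
print(threads_needed(500, 20))  # 10 threads for a hypothetical 500 ops/s target
```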

104. When creation of an EBS snapshot is initiated, but not completed, the EBS volume:

- A. Can be used while the snapshot is in progress.

- http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ebs-creating-snapshot.html

- Snapshots occur asynchronously; the point-in-time snapshot is created immediately, but the status of the snapshot is pending until the snapshot is complete (when all of the modified blocks have been transferred to Amazon S3), which can take several hours for large initial snapshots or subsequent snapshots where many blocks have changed. While it is completing, an in-progress snapshot is not affected by ongoing reads and writes to the volume.

105. What are characteristics of Amazon S3? Choose 2 answers

- C. S3 allows you to store unlimited amounts of data.

- E. Objects are directly accessible via a URL.

- https://aws.amazon.com/s3/faqs/

- Q: How much data can I store? The total volume of data and number of objects you can store are unlimited. Individual Amazon S3 objects can range in size from a minimum of 0 bytes to a maximum of 5 terabytes. The largest object that can be uploaded in a single PUT is 5 gigabytes. For objects larger than 100 megabytes, customers should consider using the Multipart Upload capability.

- Object Size Limit Now 5 TB

- http://docs.aws.amazon.com/AmazonS3/latest/dev/UsingBucket.html

- Amazon S3 supports both virtual-hosted–style and path-style URLs to access a bucket.
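The size limits in the FAQ translate into a simple upload decision, sketched below. Assumptions: TB/GB/MB are treated as binary units, and the multipart limits used (at most 10,000 parts, at least 5 MB per part except the last) come from the S3 multipart documentation rather than the FAQ quoted above. The function name is hypothetical.

```python
from typing import Tuple

MB = 1024 ** 2
GB = 1024 ** 3
TB = 1024 ** 4

MAX_OBJECT_SIZE = 5 * TB        # largest single S3 object
MAX_SINGLE_PUT = 5 * GB         # largest object that one PUT can upload
MULTIPART_SUGGESTED = 100 * MB  # FAQ: consider Multipart Upload above this size
MAX_PARTS = 10_000              # maximum number of parts in one multipart upload
MIN_PART_SIZE = 5 * MB          # minimum size of every part except the last


def upload_plan(size: int) -> Tuple[str, int]:
    """Return (method, part_size_in_bytes) for uploading an object of `size` bytes."""
    if size > MAX_OBJECT_SIZE:
        raise ValueError("object exceeds the 5 TB S3 object size limit")
    if size <= MULTIPART_SUGGESTED:
        return ("single PUT", size)
    # Smallest part size that still fits the object into MAX_PARTS parts.
    part_size = max(MIN_PART_SIZE, -(-size // MAX_PARTS))  # ceiling division
    if size <= MAX_SINGLE_PUT:
        return ("multipart (recommended)", part_size)
    return ("multipart (required)", part_size)


for size in (10 * MB, 1 * GB, 5 * TB):
    print(size, upload_plan(size))
```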

106. Per the AWS Acceptable Use Policy, penetration testing of EC2 instances:

- D. May be performed by the customer on their own instances with prior authorization from AWS.

- https://aws.amazon.com/security/penetration-testing/

- Our Acceptable Use Policy describes permitted and prohibited behavior on AWS and includes descriptions of prohibited security violations and network abuse. However, because penetration testing and other simulated events are frequently indistinguishable from these activities, we have established a policy for customers to request permission to conduct penetration tests and vulnerability scans to or originating from the AWS environment.

107. You are working with a customer who has 10 TB of archival data that they want to migrate to Amazon Glacier. The customer has a 1-Mbps connection to the Internet. Which service or feature provides the fastest method of getting the data into Amazon Glacier?

- D. AWS Import/Export

- http://docs.aws.amazon.com/amazonglacier/latest/dev/uploading-an-archive.html

- AWS Import/Export accelerates moving large amounts of data into and out of AWS using portable storage devices for transport. AWS transfers your data directly onto and off of storage devices using Amazon’s high-speed internal network, bypassing the Internet.
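A quick worked calculation shows why shipping disks wins here. Assuming decimal units (10 TB = 10^13 bytes, 1 Mbps = 10^6 bits/s) and a link running at full line rate with no protocol overhead, uploading over the Internet takes 8 × 10^7 seconds, roughly 926 days or about 2.5 years.

```python
def transfer_days(data_bytes: float, link_bits_per_second: float, efficiency: float = 1.0) -> float:
    """Days needed to push `data_bytes` over a link running at `efficiency` of line rate."""
    seconds = data_bytes * 8 / (link_bits_per_second * efficiency)
    return seconds / 86_400


TEN_TB = 10 * 10**12  # decimal terabytes
ONE_MBPS = 10**6      # bits per second

days = transfer_days(TEN_TB, ONE_MBPS)
print(f"{days:.0f} days (~{days / 365:.1f} years) even at 100% utilization")
```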

108. How can you secure data at rest on an EBS volume?

- E. Use an encrypted file system on top of the EBS volume.

- https://d0.awsstatic.com/whitepapers/AWS_Securing_Data_at_Rest_with_Encryption.pdf

- Encryption in EBS:

- Block-level encryption options operate below the file system layer, using kernel-space device drivers to perform encryption and decryption of data.

- Another option would be to use file system-level encryption, which works by stacking an encrypted file system on top of an existing file system.

109. Which approach below provides the least impact to provisioned throughput on the “Product” table?

- D. Store the images in Amazon S3 and add an S3 URL pointer to the “Product” table item for each image.

Yizhe: why?
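The reason is that DynamoDB provisioned throughput is consumed in proportion to item size: one read capacity unit (RCU) covers one strongly consistent read per second of an item up to 4 KB (an eventually consistent read costs half), and one write capacity unit (WCU) covers one write per second of an item up to 1 KB. Items are also capped at 400 KB. Storing images inline makes every product read or write consume many units, while an S3 URL keeps items small. A sketch with hypothetical item sizes (actual item size also counts attribute names):

```python
import math

KB = 1024


def read_units(item_bytes: int, strongly_consistent: bool = True) -> float:
    """RCUs for reading one item: one unit per 4 KB, halved if eventually consistent."""
    units = math.ceil(item_bytes / (4 * KB))
    return units if strongly_consistent else units / 2


def write_units(item_bytes: int) -> int:
    """WCUs for writing one item: one unit per 1 KB."""
    return math.ceil(item_bytes / KB)


with_image = 2 * KB + 300 * KB  # hypothetical: product attributes plus a 300 KB image
with_pointer = 2 * KB + 100     # same attributes plus a short S3 URL string

print(read_units(with_image), write_units(with_image))      # 76 302
print(read_units(with_pointer), write_units(with_pointer))  # 1 3
```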

110. A customer needs to capture all client connection information from their load balancer every five minutes. The company wants to use this data for analyzing traffic patterns and troubleshooting their applications. Which of the following options meets the customer requirements?

- B. Enable access logs on the load balancer.

- http://docs.aws.amazon.com/elasticloadbalancing/latest/classic/access-log-collection.html

- Elastic Load Balancing access logs:

The access logs for Elastic Load Balancing capture detailed information for requests made to your load balancer and store them as log files in the Amazon S3 bucket that you specify. Each log contains details such as the time a request was received, the client’s IP address, latencies, request path, and server responses. You can use these access logs to analyze traffic patterns and to troubleshoot your back-end applications.

111. If you want to launch Amazon Elastic Compute Cloud (EC2) instances and assign each instance a predetermined private IP address you should:

- C. Launch the instances in the Amazon Virtual Private Cloud (VPC).

- http://docs.aws.amazon.com/AmazonVPC/latest/UserGuide/vpc-ip-addressing.html

112. You need to configure an Amazon S3 bucket to serve static assets for your public-facing web application. Which methods ensure that all objects uploaded to the bucket are set to public read? Choose 2 answers

- A. Set permissions on the object to public read during upload.

- C. Configure the bucket policy to set all objects to public read.

- http://docs.aws.amazon.com/AmazonS3/latest/UG/UploadingObjectsintoAmazonS3.html

- Set permission during upload

- You can use ACLs to grant permissions to individual AWS accounts; however, it is strongly recommended that you do not grant public access to your bucket using an ACL.

- http://docs.aws.amazon.com/AmazonS3/latest/dev/example-bucket-policies.html

- Configure bucket policy to set permissions
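A bucket policy covers every object in the bucket, including future uploads, so no per-object step is needed. Below is a sketch that builds the commonly documented public-read policy. The bucket name is hypothetical, and on current accounts S3 Block Public Access must also be turned off for the bucket before such a policy can take effect.

```python
import json


def public_read_policy(bucket: str) -> str:
    """Bucket policy granting anonymous s3:GetObject on every object in `bucket`."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }
    return json.dumps(policy, indent=2)


print(public_read_policy("my-static-assets"))  # hypothetical bucket name
```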

113. Can I use Provisioned IOPS with RDS?

- D. Yes for all RDS instances

- http://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/CHAP_Storage.html

- Amazon RDS provides three storage types: magnetic, General Purpose (SSD), and Provisioned IOPS (input/output operations per second).

114. A company is storing data on Amazon Simple Storage Service (S3). The company’s security policy mandates that data is encrypted at rest. Which of the following methods can achieve this? Choose 3 answers

- A. Use Amazon S3 server-side encryption with AWS Key Management Service managed keys.

- B. Use Amazon S3 server-side encryption with customer-provided keys.

- E. Encrypt the data on the client-side before ingesting to Amazon S3 using their own master key.

- http://docs.aws.amazon.com/AmazonS3/latest/dev/serv-side-encryption.html

- Use Server-Side Encryption with Amazon S3-Managed Keys (SSE-S3)

- Use Server-Side Encryption with AWS KMS-Managed Keys (SSE-KMS)

- Use Server-Side Encryption with Customer-Provided Keys (SSE-C)

- http://docs.aws.amazon.com/AmazonS3/latest/dev/UsingClientSideEncryption.html

- The Amazon S3 encryption client locally generates a one-time-use symmetric key (also known as a data encryption key or data key). It uses this data key to encrypt the data of a single S3 object (for each object, the client generates a separate data key).

- The client encrypts the data encryption key using the master key you provide. The client uploads the encrypted data key and its material description as part of the object metadata. The material description helps the client later determine which client-side master key to use for decryption (when you download the object, the client decrypts it).

- The client then uploads the encrypted data to Amazon S3 and also saves the encrypted data key as object metadata (x-amz-meta-x-amz-key) in Amazon S3 by default.

115. Which procedure for backing up a relational database on EC2 that is using a set of RAIDed EBS volumes for storage minimizes the time during which the database cannot be written to and results in a consistent backup?

A. 1. Detach EBS volumes, 2. Start EBS snapshot of volumes, 3. Re-attach EBS volumes

B. 1. Stop the EC2 Instance. 2. Snapshot the EBS volumes

C. 1. Suspend disk I/O, 2. Create an image of the EC2 Instance, 3. Resume disk I/O

D. 1. Suspend disk I/O, 2. Start EBS snapshot of volumes, 3. Resume disk I/O

E. 1. Suspend disk I/O, 2. Start EBS snapshot of volumes, 3. Wait for snapshots to complete, 4. Resume disk I/O

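Most likely D, per A Cloud Guru: the point-in-time snapshot is created as soon as it starts (see question 104), so disk I/O can be resumed right after the snapshots are initiated, without waiting for them to complete as E would require.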

116. After creating a new IAM user which of the following must be done before they can successfully make API calls?

- D. Create a set of Access Keys for the user.

- http://docs.aws.amazon.com/IAM/latest/UserGuide/Using_SettingUpUser.html

Programmatic access: If the user needs to make API calls or use the AWS CLI or the Tools for Windows PowerShell, create an access key (an access key ID and a secret access key) for that user.

117. Which of the following are valid statements about Amazon S3? Choose 2 answers

- C. A successful response to a PUT request only occurs when a complete object is saved.

- E. S3 provides eventual consistency for overwrite PUTS and DELETES.

- http://docs.aws.amazon.com/AmazonS3/latest/dev/Introduction.html#ConsistencyModel

118. You are configuring your company’s application to use Auto Scaling and need to move user state information. Which of the following AWS services provides a shared data store with durability and low latency?

- D. Amazon DynamoDB

- https://aws.amazon.com/dynamodb/faqs/

- Q: When should I use Amazon DynamoDB vs Amazon S3?

- Amazon DynamoDB stores structured data, indexed by primary key, and allows low latency read and write access to items ranging from 1 byte up to 400KB. Amazon S3 stores unstructured blobs and is suited for storing large objects up to 5 TB. In order to optimize your costs across AWS services, large objects or infrequently accessed data sets should be stored in Amazon S3, while smaller data elements or file pointers (possibly to Amazon S3 objects) are best saved in Amazon DynamoDB.

- Q: How does Amazon DynamoDB achieve high uptime and durability?

- To achieve high uptime and durability, Amazon DynamoDB synchronously replicates data across three facilities within an AWS Region.

119. Which features can be used to restrict access to data in S3? Choose 2 answers

- A. Set an S3 ACL on the bucket or the object.

- C. Set an S3 bucket policy.

- http://docs.aws.amazon.com/AmazonCloudFront/latest/DeveloperGuide/private-content-restricting-access-to-s3.html#private-content-origin-access-identity-signature-version-4

- Updating Amazon S3 Bucket Policies

- Updating Amazon S3 ACLs

120. Which of the following are characteristics of a reserved instance? Choose 3 answers

- A. It can be migrated across Availability Zones

- B. It is specific to an Amazon Machine Image (AMI)

- C. It can be applied to instances launched by Auto Scaling

- D. It is specific to an instance type (when you purchase, you must select an instance type)

- E. It can be used to lower Total Cost of Ownership (TCO) of a system

- You can use Auto Scaling or other AWS services to launch the On-Demand Instances that use your Reserved Instance benefits.

- https://media.amazonwebservices.com/AWS_TCO_Web_Applications.pdf

- When you are comparing TCO, we highly recommend that you use the Reserved Instance (RI) pricing option in your calculations.

121. Which Amazon Elastic Compute Cloud feature can you query from within the instance to access instance properties?

- C. Instance metadata

- http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ec2-instance-metadata.html#instancedata-data-retrieval

- Instance Metadata and User Data
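Instance metadata is served over HTTP at the link-local address 169.254.169.254, reachable only from inside the instance. A minimal sketch with the standard library, assuming the IMDSv2 flow (a session token obtained with a PUT, then sent on each GET); IMDSv2 is not covered by the original answer, and the helper names are hypothetical.

```python
import urllib.request

IMDS_BASE = "http://169.254.169.254/latest"


def token_request(ttl_seconds: int = 21600) -> urllib.request.Request:
    """IMDSv2: request a session token (PUT) valid for ttl_seconds (max 6 hours)."""
    return urllib.request.Request(
        f"{IMDS_BASE}/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": str(ttl_seconds)},
    )


def metadata_request(path: str, token: str) -> urllib.request.Request:
    """Build a GET for a metadata path such as 'instance-id' or 'placement/availability-zone'."""
    return urllib.request.Request(
        f"{IMDS_BASE}/meta-data/{path.lstrip('/')}",
        headers={"X-aws-ec2-metadata-token": token},
    )


def get_metadata(path: str, timeout: float = 2.0) -> str:
    """Fetch one metadata value; only works from inside an EC2 instance."""
    with urllib.request.urlopen(token_request(), timeout=timeout) as resp:
        token = resp.read().decode()
    with urllib.request.urlopen(metadata_request(path, token), timeout=timeout) as resp:
        return resp.read().decode()
```

On an instance, `get_metadata("instance-id")` would return the instance's ID; off EC2 the address is unreachable and `urlopen` raises after the timeout.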

122. Which of the following requires a custom CloudWatch metric to monitor?

- A. Memory Utilization of an EC2 instance

- http://docs.aws.amazon.com/AmazonCloudWatch/latest/monitoring/ec2-metricscollected.html
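Memory usage is visible only inside the guest OS, so the hypervisor-level EC2 metrics cannot report it; something on the instance has to compute it and publish it as a custom metric. A minimal sketch, assuming a Linux guest with `/proc/meminfo` and defining utilization as `(MemTotal - MemAvailable) / MemTotal`; the metric name and helper names are hypothetical choices.

```python
def memory_utilization(meminfo_text: str) -> float:
    """Percent of memory in use, from the text of /proc/meminfo."""
    fields = {}
    for line in meminfo_text.splitlines():
        name, _, rest = line.partition(":")
        parts = rest.split()
        if parts:
            fields[name.strip()] = int(parts[0])  # values are reported in kB
    total, available = fields["MemTotal"], fields["MemAvailable"]
    return 100.0 * (total - available) / total


def metric_datum(instance_id: str, percent: float) -> dict:
    """One entry in the MetricData list of a CloudWatch PutMetricData call."""
    return {
        "MetricName": "MemoryUtilization",  # hypothetical custom metric name
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Value": round(percent, 2),
        "Unit": "Percent",
    }
```

On a real instance you would read `/proc/meminfo` and pass `MetricData=[metric_datum(...)]`, plus a `Namespace` of your choosing, to boto3's `put_metric_data`; in practice the CloudWatch agent can collect memory metrics without custom code.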

123. You are tasked with setting up a Linux bastion host for access to Amazon EC2 instances running in your VPC. Only clients connecting from the corporate external public IP address 72.34.51.100 should have SSH access to the host. Which option will meet the customer requirement?

- A. Security Group Inbound Rule: Protocol: TCP, Port Range: 22, Source: 72.34.51.100/32
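The `/32` prefix is what limits the rule to exactly one address. A small sketch using Python's `ipaddress` module to show which clients the rule above would match; the `RULE` dict and `ssh_allowed` are hypothetical illustrations, not an AWS API.

```python
import ipaddress

# The inbound rule from answer A: TCP, port 22, source 72.34.51.100/32.
RULE = {"protocol": "tcp", "port": 22,
        "source": ipaddress.ip_network("72.34.51.100/32")}


def ssh_allowed(client_ip: str, protocol: str = "tcp", port: int = 22,
                rule: dict = RULE) -> bool:
    """True if a connection from client_ip matches the single allow rule."""
    return (protocol == rule["protocol"]
            and port == rule["port"]
            and ipaddress.ip_address(client_ip) in rule["source"])
```

Because security groups only contain allow rules, anything that does not match is implicitly denied, so `72.34.51.101` or a connection to port 3389 is rejected.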

124. A customer needs corporate IT governance and cost oversight of all AWS resources consumed by its divisions. The divisions want to maintain administrative control of the discrete AWS resources they consume and keep those resources separate from the resources of other divisions. Which of the following options, when used together, will support the autonomy and control of divisions while enabling corporate IT to maintain governance and cost oversight?

Choose 2 answers

- D. Use AWS Consolidated Billing to link the divisions’ accounts to a parent corporate account.

- E. Write all child AWS CloudTrail and Amazon CloudWatch logs to each child account’s Amazon S3 ‘Log’ bucket.

- Use the Consolidated Billing feature to consolidate payment for multiple Amazon Web Services (AWS) accounts, or for multiple Amazon Internet Services Pvt. Ltd (AISPL) accounts, within your organization by designating one of them as the payer account. With Consolidated Billing, you can see a combined view of AWS charges incurred by all accounts and get a cost report for each individual account associated with your payer account. Consolidated Billing is offered at no additional charge. AWS and AISPL accounts cannot be consolidated together.

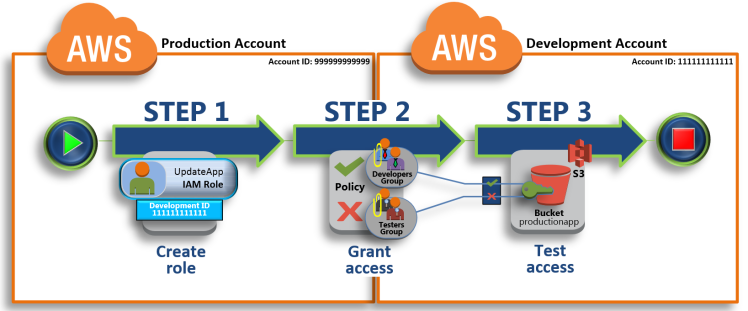

- Cross-account access with IAM roles: this tutorial teaches you how to use a role to delegate access to resources that are in different AWS accounts that you own (Production and Development). You share resources in one account with users in a different account. By setting up cross-account access in this way, you don’t need to create individual IAM users in each account. In addition, users don’t have to sign out of one account and sign in to another to access resources in different AWS accounts. After configuring the role, you see how to use the role from the AWS Management Console, the AWS CLI, and the API.

- C. Create separate VPCs for each division within the corporate IT AWS account: this does not work, because all the VPCs belong to a single AWS account, so the divisions are not separated.

125. You run an ad-supported photo sharing website using S3 to serve photos to visitors of your site. At some point you find out that other sites have been linking to the photos on your site, causing loss to your business. What is an effective method to mitigate this?

- A. Remove public read access and use signed URLs with expiry dates.

- http://docs.aws.amazon.com/AmazonCloudFront/latest/DeveloperGuide/private-content-signed-urls.html

- A signed URL includes additional information, for example, an expiration date and time, that gives you more control over access to your content. This additional information appears in a policy statement, which is based on either a canned policy or a custom policy; the CloudFront documentation linked above explains the differences between the two.

- Note: You can create some signed URLs using canned policies and some using custom policies for the same distribution.
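The core idea of a signed URL with an expiry can be illustrated with a short sketch. Note that this swaps in a different technique: it uses an HMAC over the URL and expiry time with a hypothetical shared secret, whereas CloudFront signs with an RSA private key from a key pair. The `Expires` and `Signature` parameter names only echo CloudFront's; `sign_url`, `verify_url`, and `SECRET` are hypothetical, and the sketch assumes the base URL has no query string of its own.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlsplit

SECRET = b"hypothetical-shared-secret"


def _signature(base_url: str, expires_at: int, secret: bytes) -> str:
    return hmac.new(secret, f"{base_url}|{expires_at}".encode(), hashlib.sha256).hexdigest()


def sign_url(url: str, expires_at: int, secret: bytes = SECRET) -> str:
    """Append an expiry time and a signature covering both the URL and the expiry."""
    query = urlencode({"Expires": expires_at, "Signature": _signature(url, expires_at, secret)})
    return f"{url}?{query}"


def verify_url(signed_url: str, now: Optional[float] = None, secret: bytes = SECRET) -> bool:
    """Reject tampered URLs and URLs whose expiry time has passed."""
    parts = urlsplit(signed_url)
    query = parse_qs(parts.query)
    try:
        expires_at = int(query["Expires"][0])
        signature = query["Signature"][0]
    except (KeyError, ValueError):
        return False
    base = f"{parts.scheme}://{parts.netloc}{parts.path}"
    if not hmac.compare_digest(signature, _signature(base, expires_at, secret)):
        return False
    return (time.time() if now is None else now) < expires_at
```

A hotlinking site can copy a signed link, but the copy stops working once `Expires` passes, and editing the path or the expiry invalidates the signature.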

126. You are working with a customer who is using Chef configuration management in their data center. Which service is designed to let the customer leverage existing Chef recipes in AWS?

- D. AWS OpsWorks

- https://aws.amazon.com/opsworks/

- AWS OpsWorks is a configuration management service that uses Chef, an automation platform that treats server configurations as code. OpsWorks uses Chef to automate how servers are configured, deployed, and managed across your Amazon Elastic Compute Cloud (Amazon EC2) instances or on-premises compute environments. OpsWorks has two offerings: AWS OpsWorks for Chef Automate and AWS OpsWorks Stacks.

127. An Auto Scaling group spans 3 AZs and currently has 4 running EC2 instances. When Auto Scaling needs to terminate an EC2 instance, by default Auto Scaling will: Choose 2 answers

- C. Send an SNS notification, if configured to do so.

- D. Terminate an instance in the AZ which currently has 2 running EC2 instances.

- http://docs.aws.amazon.com/autoscaling/latest/userguide/lifecycle-hooks.html

- You can use Amazon SNS to set up a notification target to receive notifications when a lifecycle action occurs.

- http://docs.aws.amazon.com/autoscaling/latest/userguide/as-instance-termination.html

- Default Termination Policy

- Auto Scaling determines whether there are instances in multiple Availability Zones. If so, it selects the Availability Zone with the most instances and at least one instance that is not protected from scale in. If there is more than one Availability Zone with this number of instances, Auto Scaling selects the Availability Zone with the instances that use the oldest launch configuration.

- Auto Scaling determines which unprotected instances in the selected Availability Zone use the oldest launch configuration. If there is one such instance, it terminates it.

- If there are multiple instances that use the oldest launch configuration, Auto Scaling determines which unprotected instances are closest to the next billing hour. (This helps you maximize the use of your EC2 instances while minimizing the number of hours you are billed for Amazon EC2 usage.) If there is one such instance, Auto Scaling terminates it.

- If there is more than one unprotected instance closest to the next billing hour, Auto Scaling selects one of these instances at random.
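The four steps of the default termination policy above can be sketched as a selection function. This is a minimal model, not Auto Scaling's implementation: the `Instance` fields (`launch_config_created`, where a smaller number means an older launch configuration, and `minutes_to_billing_hour`) are hypothetical, and any AZ tie that survives the launch-configuration tie-break is resolved by list order.

```python
import random
from collections import defaultdict
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Instance:
    instance_id: str
    az: str
    launch_config_created: int    # hypothetical: smaller = older launch configuration
    minutes_to_billing_hour: int  # hypothetical: minutes until the next billing hour
    protected: bool = False       # protected from scale in


def choose_instance_to_terminate(instances: List[Instance],
                                 rng: Optional[random.Random] = None) -> Optional[Instance]:
    rng = rng or random.Random()
    by_az = defaultdict(list)
    for inst in instances:
        by_az[inst.az].append(inst)
    # Step 1: AZs with at least one unprotected instance; keep those with the most instances.
    eligible = {az: insts for az, insts in by_az.items() if any(not i.protected for i in insts)}
    if not eligible:
        return None
    most = max(len(insts) for insts in eligible.values())
    tied_azs = [az for az, insts in eligible.items() if len(insts) == most]
    # AZ tie-break: the zone whose instances use the oldest launch configuration.
    az = min(tied_azs, key=lambda a: min(i.launch_config_created for i in eligible[a]))
    # Step 2: unprotected instances in that AZ using the oldest launch configuration.
    candidates = [i for i in eligible[az] if not i.protected]
    oldest = min(i.launch_config_created for i in candidates)
    candidates = [i for i in candidates if i.launch_config_created == oldest]
    # Step 3: of those, the ones closest to the next billing hour.
    closest = min(i.minutes_to_billing_hour for i in candidates)
    candidates = [i for i in candidates if i.minutes_to_billing_hour == closest]
    # Step 4: pick at random among any remaining ties.
    return rng.choice(candidates)
```

With the question's layout (2 + 1 + 1 instances across 3 AZs), step 1 always selects the AZ holding 2 instances, which is why answer D is correct.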

128. When an EC2 instance that is backed by an S3-based AMI is terminated, what happens to the data on the root volume?

- D. Data is automatically deleted.

- http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/RootDeviceStorage.html

- Any data on the instance store volumes persists as long as the instance is running, but this data is deleted when the instance is terminated (instance store-backed instances do not support the Stop action) or if it fails (such as if an underlying drive has issues).

129. To optimize performance for a compute cluster that requires low inter-node latency, which of the following features should you use?

- D. Placement Groups

130. You have an environment that consists of a public subnet using Amazon VPC and 3 instances that are running in this subnet. These three instances can successfully communicate with other hosts on the Internet. You launch a fourth instance in the same subnet, using the same AMI and security group configuration you used for the others, but find that this instance cannot be accessed from the Internet. What should you do to enable Internet access?

- B. Assign an Elastic IP address to the fourth instance.

131. You have a distributed application that periodically processes large volumes of data across multiple Amazon EC2 Instances. The application is designed to recover gracefully from Amazon EC2 instance failures. You are required to accomplish this task in the most cost-effective way. Which of the following will meet your requirements?

- A. Spot Instances

- Look at the two key phrases in the question: “most cost-effective way” and “recover gracefully”. Whenever you see “most cost-effective way”, think Spot Instances first; then confirm that the workload can recover from interruptions, because Spot Instances can be reclaimed at any time.

- http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/spot-interruptions.html

- The following are the possible reasons that Amazon EC2 will terminate your Spot instances:

- Price—The Spot price is greater than your bid price.

- Capacity—If there are not enough unused EC2 instances to meet the demand for Spot instances, Amazon EC2 terminates Spot instances, starting with those instances with the lowest bid prices. If there are several Spot instances with the same bid price, the order in which the instances are terminated is determined at random.

- Constraints—If your request includes a constraint such as a launch group or an Availability Zone group, these Spot instances are terminated as a group when the constraint can no longer be met.
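The price and capacity reasons above can be modeled in a few lines, which makes it clear why the application must tolerate losing instances at any time. A minimal sketch under stated assumptions: the bid-based model described in this excerpt, a hypothetical `SpotInstance` type, and a `capacity_shortfall` count standing in for how many instances EC2 needs back. Launch-group and AZ-group constraints are not modeled.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SpotInstance:
    instance_id: str
    bid: float  # maximum price this request is willing to pay, in USD per hour


def instances_to_terminate(fleet: List[SpotInstance], spot_price: float,
                           capacity_shortfall: int = 0,
                           rng: Optional[random.Random] = None) -> List[SpotInstance]:
    """Return the instances reclaimed for price, then for capacity."""
    rng = rng or random.Random()
    # Price: every instance whose bid is below the current Spot price is terminated.
    outbid = [i for i in fleet if spot_price > i.bid]
    outbid_ids = {i.instance_id for i in outbid}
    remaining = [i for i in fleet if i.instance_id not in outbid_ids]
    # Capacity: reclaim the lowest bids first. Shuffling before a stable sort
    # leaves instances with equal bids in random order.
    rng.shuffle(remaining)
    remaining.sort(key=lambda i: i.bid)
    return outbid + remaining[:capacity_shortfall]
```

Since any instance can appear in the returned list, the workload in the question is a good fit for Spot only because it is designed to recover gracefully from these terminations.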

132. Which of the following are true regarding AWS CloudTrail? Choose 3 answers

- A. CloudTrail is enabled globally

- http://docs.aws.amazon.com/awscloudtrail/latest/userguide/cloudtrail-concepts.html: A trail can be applied to all regions or a single region. As a best practice, create a trail that applies to all regions in the AWS partition in which you are working. This is the default setting when you create a trail in the CloudTrail console.

- B. CloudTrail is enabled by default

- C. CloudTrail is enabled on a per-region basis

- C: you can have multiple single-region trails.

- D. CloudTrail is enabled on a per-service basis.

- You cannot enable CloudTrail per service or choose which services it records.

- E. Logs can be delivered to a single Amazon S3 bucket for aggregation.

- FAQ: You can configure one S3 bucket as the destination for multiple accounts. For detailed instructions, see the section on aggregating log files to a single Amazon S3 bucket in the AWS CloudTrail User Guide.

- F. CloudTrail is enabled for all available services within a region.

- FAQ: Q: What services are supported by CloudTrail?

- AWS CloudTrail records API activity and service events from most AWS services. For the list of supported services, see CloudTrail Supported Services in the CloudTrail User Guide.

- G. Logs can only be processed and delivered to the region in which they are generated.
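Aggregating trails from several accounts into one bucket (option E) works through the bucket policy: the CloudTrail service principal needs permission to read the bucket ACL and to write under each account's `AWSLogs/<account-id>/` prefix. The sketch below builds that policy as a Python dict, assuming the long-standing shape of the CloudTrail bucket policy; the bucket name and account IDs are hypothetical, and the current CloudTrail User Guide may recommend additional conditions (for example on the source trail ARN), so check it before use.

```python
import json


def cloudtrail_bucket_policy(bucket: str, account_ids: list) -> dict:
    """Bucket policy letting CloudTrail in each listed account deliver logs to one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AWSCloudTrailAclCheck",
                "Effect": "Allow",
                "Principal": {"Service": "cloudtrail.amazonaws.com"},
                "Action": "s3:GetBucketAcl",
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                "Sid": "AWSCloudTrailWrite",
                "Effect": "Allow",
                "Principal": {"Service": "cloudtrail.amazonaws.com"},
                "Action": "s3:PutObject",
                # One log prefix per contributing account.
                "Resource": [f"arn:aws:s3:::{bucket}/AWSLogs/{acct}/*" for acct in account_ids],
                "Condition": {"StringEquals": {"s3:x-amz-acl": "bucket-owner-full-control"}},
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(cloudtrail_bucket_policy("central-trail-logs",
                                              ["111111111111", "222222222222"]), indent=2))
```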

133. You have a content management system running on an Amazon EC2 instance that is approaching 100% CPU utilization. Which option will reduce load on the Amazon EC2 instance?

- B. Create a CloudFront distribution, and configure the Amazon EC2 instance as the origin

- https://aws.amazon.com/cloudfront/faqs/

- Q. How does Amazon CloudFront lower my costs to distribute content over the Internet?

- Like other AWS services, Amazon CloudFront has no minimum commitments and charges you only for what you use. Compared to self-hosting, Amazon CloudFront spares you from the expense and complexity of operating a network of cache servers in multiple sites across the internet and eliminates the need to over-provision capacity in order to serve potential spikes in traffic. Amazon CloudFront also uses techniques such as collapsing simultaneous viewer requests at an edge location for the same file into a single request to your origin server. This reduces the load on your origin servers, reducing the need to scale your origin infrastructure, which can bring you further cost savings.

- Additionally, if you are using an AWS origin (e.g., Amazon S3, Amazon EC2, etc.), effective December 1, 2014, we are no longer charging for AWS data transfer out to Amazon CloudFront. This applies to data transfer from all AWS regions to all global CloudFront edge locations.
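The request-collapsing behavior described in the FAQ can be sketched as a "single-flight" cache: the first request for a key fetches from the origin, and concurrent requests for the same key wait for that fetch instead of hitting the origin again. This is an illustrative sketch only, not CloudFront's implementation; `CollapsingEdgeCache` and `origin_fetch` are hypothetical names, and the cache has no TTL or retry logic.

```python
import threading


class CollapsingEdgeCache:
    """Collapse concurrent requests for the same key into one origin fetch."""

    def __init__(self, origin_fetch):
        self._origin_fetch = origin_fetch
        self._lock = threading.Lock()
        self._cache = {}      # key -> cached response body (no expiry in this sketch)
        self._inflight = {}   # key -> Event set when the leader's fetch finishes
        self.origin_calls = 0

    def get(self, key):
        with self._lock:
            if key in self._cache:
                return self._cache[key]
            event = self._inflight.get(key)
            leader = event is None
            if leader:
                event = threading.Event()
                self._inflight[key] = event
                self.origin_calls += 1
        if leader:
            try:
                value = self._origin_fetch(key)  # the only request that reaches the origin
                with self._lock:
                    self._cache[key] = value
            finally:
                with self._lock:
                    del self._inflight[key]
                event.set()
            return value
        event.wait()
        with self._lock:
            # None if the leader's fetch failed; a real cache would retry.
            return self._cache.get(key)
```

However many viewers ask for `/index.html` at once, the origin (here, the busy EC2 instance) sees one request, and later requests are served from the cache.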

134. You have a load balancer configured for VPC, and all back-end Amazon EC2 instances are in service. However, your web browser times out when connecting to the load balancer’s DNS name. Which options are probable causes of this behavior? Choose 2 answers

- A. The load balancer was not configured to use a public subnet with an Internet gateway configured

- C. The security groups or network ACLs are not properly configured for web traffic.
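A common way network ACLs break web traffic is that they are stateless: allowing inbound port 80 is not enough, because the response leaves toward the browser's ephemeral port and needs its own outbound rule (security groups, by contrast, are stateful). The sketch below models NACL evaluation, where rules are checked in ascending rule-number order, the first match decides, and unmatched traffic hits the implicit deny. It assumes every rule covers TCP from 0.0.0.0/0, so only ports are compared; `NaclRule` and `nacl_allows` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class NaclRule:
    number: int     # rules are evaluated from the lowest number upward
    port_from: int
    port_to: int
    allow: bool


def nacl_allows(rules: List[NaclRule], port: int) -> bool:
    """First matching rule (by rule number) decides; no match means deny."""
    for rule in sorted(rules, key=lambda r: r.number):
        if rule.port_from <= port <= rule.port_to:
            return rule.allow
    return False  # implicit final deny rule
```

For example, an outbound ACL that allows only port 80 drops the response to a browser connecting from port 50000, which shows up as the timeout in the question; allowing outbound 1024-65535 fixes it.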

135. A company needs to deploy services to an AWS region which they have not previously used. The company currently has an AWS Identity and Access Management (IAM) role for the Amazon EC2 instances, which permits the instances to have access to Amazon DynamoDB. The company wants their EC2 instances in the new region to have the same privileges. How should the company achieve this?

- B. Assign the existing IAM role to the Amazon EC2 instances in the new region
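Answer B works because IAM is a global service: a role is not tied to a region, and its ARN has an empty region field, unlike regional resources such as DynamoDB tables. A small parsing sketch makes this visible; the account ID, role name, and table name are hypothetical. One caveat, as an assumption about typical setups: if the role's policy names specific table ARNs, tables in the new region would still need to be covered by that policy.

```python
def parse_arn(arn: str) -> dict:
    """Split arn:partition:service:region:account-id:resource into its fields."""
    # maxsplit=5 keeps any ':' inside the resource part intact; malformed
    # strings with fewer fields raise ValueError on unpacking.
    prefix, partition, service, region, account, resource = arn.split(":", 5)
    if prefix != "arn":
        raise ValueError(f"not an ARN: {arn}")
    return {"partition": partition, "service": service, "region": region,
            "account": account, "resource": resource}
```

Parsing `arn:aws:iam::123456789012:role/DynamoDBAccess` yields an empty `region`, while `arn:aws:dynamodb:us-east-1:123456789012:table/Orders` yields `us-east-1`.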